
5.7. The Poisson Distribution

While the binomial distribution helps us count successes in a fixed number of trials, many real-world situations involve counting rare events that occur randomly over time or space. Think about counting phone calls to a help desk during an hour, defects in a length of computer tape, or particles emitted by a radioactive substance. These scenarios share a structure that leads us to another fundamental distribution in statistics: the Poisson distribution.

Road Map 🧭

Identify situations where the Poisson distribution applies.

Master the Poisson probability mass function and its single parameter \(\lambda\).

Derive the Poisson expected value and variance using infinite series techniques.

Understand how the interval length affects the parameter \(\lambda\).

5.7.1. From Counting Events to Modeling Rates

The Poisson distribution emerges when we count events that occur randomly over continuous intervals of time, space, or volume. Unlike a binomial experiment which involves a fixed number of trials, the number of events in a Poisson process is theoretically unlimited.

What Makes a Process Poisson?

A Poisson process has three essential properties:

1. Stationary and Proportional

The probability that an event occurs in any interval depends only on the length of that interval, not on where the interval occurs (stationarity).

Equal-sized intervals have the same probability distribution, and the expected number of events grows in proportion to the length of the interval (proportionality).

For example, suppose we’re counting phone calls to a help desk that has a constant call rate. The probability of receiving exactly one call should be the same whether we look at 9-10 AM or 2-3 PM. Furthermore, if we expect 3 calls per hour on average, we should expect 6 calls per two-hour period.

2. Independent Events

Individual events occur independently of each other. The occurrence of one event doesn’t influence when the next event will happen. Additionally, the numbers of events in non-overlapping intervals are independent of one another.

In our phone call example, receiving three calls between 9-10 AM doesn’t affect the number of calls received between 10-11 AM.

3. Orderliness (no bunching)

Events cannot occur simultaneously. In sufficiently small intervals, the chance of two or more events occurring is negligible. Events effectively arrive one at a time.

Examples of Poisson Distributions

Several real-world scenarios approximately follow the rules of Poisson processes:

Radioactive decay: The number of alpha particles emitted from uranium-238 in one minute

Call centers: The number of calls received during busy hours on any given day

Quality control: The number of flaws on a computer tape of fixed length

Ecology: The number of dead trees in a square mile of forest

Traffic: The number of accidents at an intersection per month

5.7.2. The Poisson Distribution

When events follow a Poisson process, we model the count of events using a Poisson distribution.

Definition

A Poisson random variable \(X\) counts the number of independently occurring events in a fixed interval, where events occur at some average rate \(\lambda\) (lambda) per interval.

When \(X\) has a Poisson distribution, we write \(X \sim \text{Poisson}(\lambda)\).

Probability Mass Function

The Poisson PMF gives the probability of observing exactly \(x\) events:

\[p_X(x) = P(X = x) = \frac{e^{-\lambda}\lambda^x}{x!}\]

for \(x \in \text{supp}(X) = \{0, 1, 2, 3, \ldots\}\).

Notice several key features of this distribution:

Single parameter: Unlike the binomial distribution which has two parameters (\(n\) and \(p\)), Poisson has only one parameter \(\lambda\).

Unbounded support: A Poisson random variable can theoretically take any non-negative integer value, unlike binomial whose support is bounded above by \(n\).
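The PMF is easy to evaluate directly. The following is a minimal Python sketch using only the standard library; the function name `poisson_pmf` and the choice \(\lambda = 3\) are illustrative assumptions, not part of the text.

```python
import math

def poisson_pmf(x: int, lam: float) -> float:
    """Return P(X = x) for X ~ Poisson(lam); zero outside the support."""
    if x < 0:
        return 0.0
    return math.exp(-lam) * lam**x / math.factorial(x)

# Tabulate the first few probabilities for an illustrative rate of 3.
for x in range(6):
    print(x, round(poisson_pmf(x, 3.0), 4))

# The support is unbounded, but the probabilities still sum to 1.
# Summing 60 terms is more than enough when lam = 3.
total = sum(poisson_pmf(x, 3.0) for x in range(60))
print(total)
```

Because the support has no upper bound, any finite sum is only an approximation of the total probability, although the omitted tail quickly becomes negligible.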

5.7.3. Expected Value and Variance: The Power of \(\lambda\)

One of the most elegant features of the Poisson distribution is that its parameter \(\lambda\) completely determines both the center and spread of the distribution.

Expected Value

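To find \(E[X]\) for a Poisson random variable, we use the definition of expected value:

\[E[X] = \sum_{x=0}^{\infty} x \cdot \frac{e^{-\lambda}\lambda^x}{x!}\]

Since the term with \(x = 0\) equals zero, the summation effectively begins at \(x = 1\). In each remaining term, we can cancel \(x\) against the factor of \(x\) contained in \(x!\), which leaves \((x-1)!\) in the denominator. Since \(e^{-\lambda}\) does not depend on \(x\), we can bring it outside the summation, together with one factor of \(\lambda\):

\[E[X] = \sum_{x=1}^{\infty} \frac{e^{-\lambda}\lambda^x}{(x-1)!} = \lambda e^{-\lambda} \sum_{x=1}^{\infty} \frac{\lambda^{x-1}}{(x-1)!}\]

Substituting \(u = x - 1\), we get:

\[E[X] = \lambda e^{-\lambda} \sum_{u=0}^{\infty} \frac{\lambda^u}{u!}\]

The sum is the Taylor series for \(e^{\lambda}\). Therefore:

\[E[X] = \lambda e^{-\lambda} e^{\lambda} = \lambda\]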

Variance

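For the variance, we use \(\text{Var}(X) = E[X^2] - (E[X])^2\). We already know \(E[X] = \lambda\), and we need \(E[X^2]\):

\[E[X^2] = \sum_{x=0}^{\infty} x^2 \cdot \frac{e^{-\lambda}\lambda^x}{x!}\]

Through similar algebraic manipulation (starting at \(x = 1\), canceling factorials, and using substitutions), we can show:

\[E[X^2] = \lambda^2 + \lambda\]

Therefore:

\[\text{Var}(X) = E[X^2] - (E[X])^2 = (\lambda^2 + \lambda) - \lambda^2 = \lambda\]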

Summary of Poisson Distribution Properties

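For \(X \sim \text{Poisson}(\lambda)\):

PMF: \(p_X(x) = \frac{e^{-\lambda}\lambda^x}{x!}\) for \(x = 0, 1, 2, \ldots\)

Expected value: \(E[X] = \lambda\)

Variance: \(\text{Var}(X) = \lambda\)

Standard deviation: \(\sigma_X = \sqrt{\lambda}\)

The summary reveals a remarkable property of the Poisson distribution: knowing \(\lambda\) tells us everything about the distribution’s location and spread.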
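As a numerical sanity check on \(E[X] = \text{Var}(X) = \lambda\), the infinite sums can be approximated by truncating them. This is only a sketch: the helper names and the cutoff of 150 terms are assumptions chosen so that the neglected tail is negligible for the rates shown.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def truncated_moments(lam, terms=150):
    """Approximate (E[X], Var(X)) using the first `terms` terms of each series."""
    mean = sum(x * poisson_pmf(x, lam) for x in range(terms))
    second_moment = sum(x**2 * poisson_pmf(x, lam) for x in range(terms))
    return mean, second_moment - mean**2

# Mean and variance should both come out (numerically) equal to lam.
for lam in (1.0, 2.5, 10.0):
    mean, var = truncated_moments(lam)
    print(lam, round(mean, 6), round(var, 6))
```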

5.7.4. Visualizing Poisson Distributions

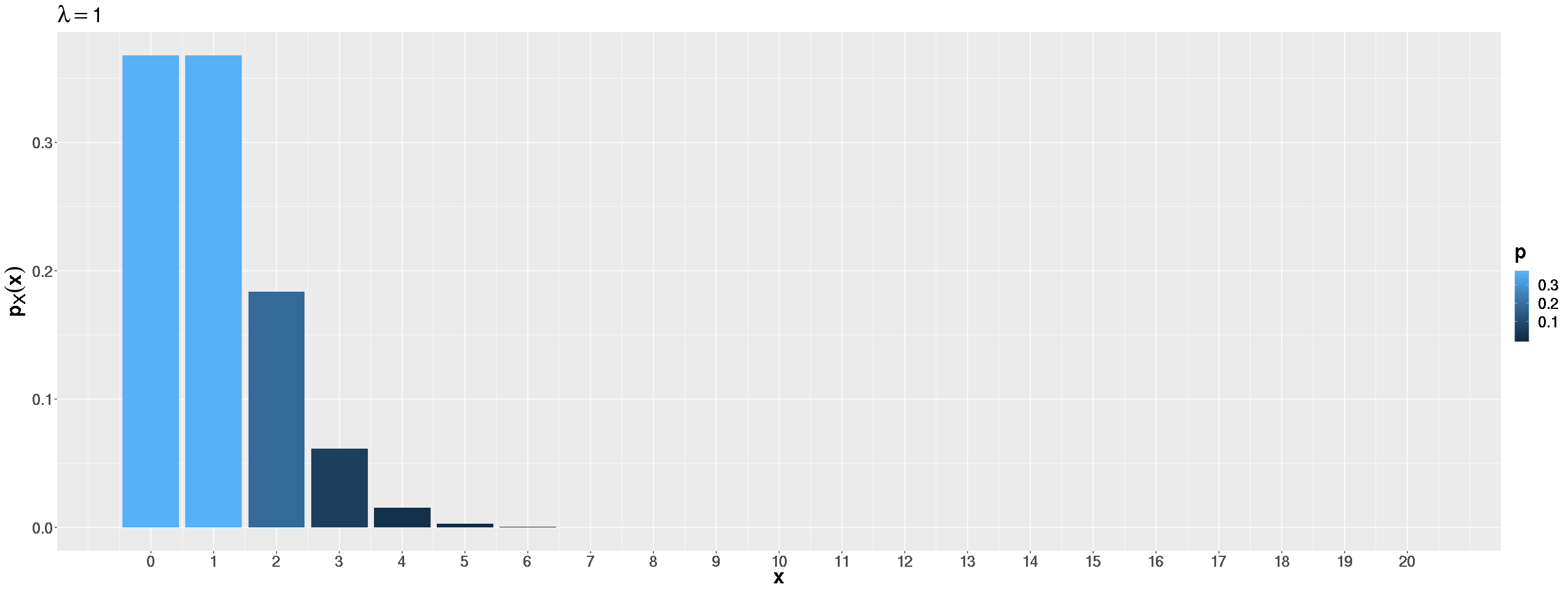

Small \(\lambda \, (\lambda=1)\)

When events are rare, most probability concentrates at 0 and 1, with a long right tail. The distribution is highly right-skewed.

Fig. 5.14 \(\lambda=1\)

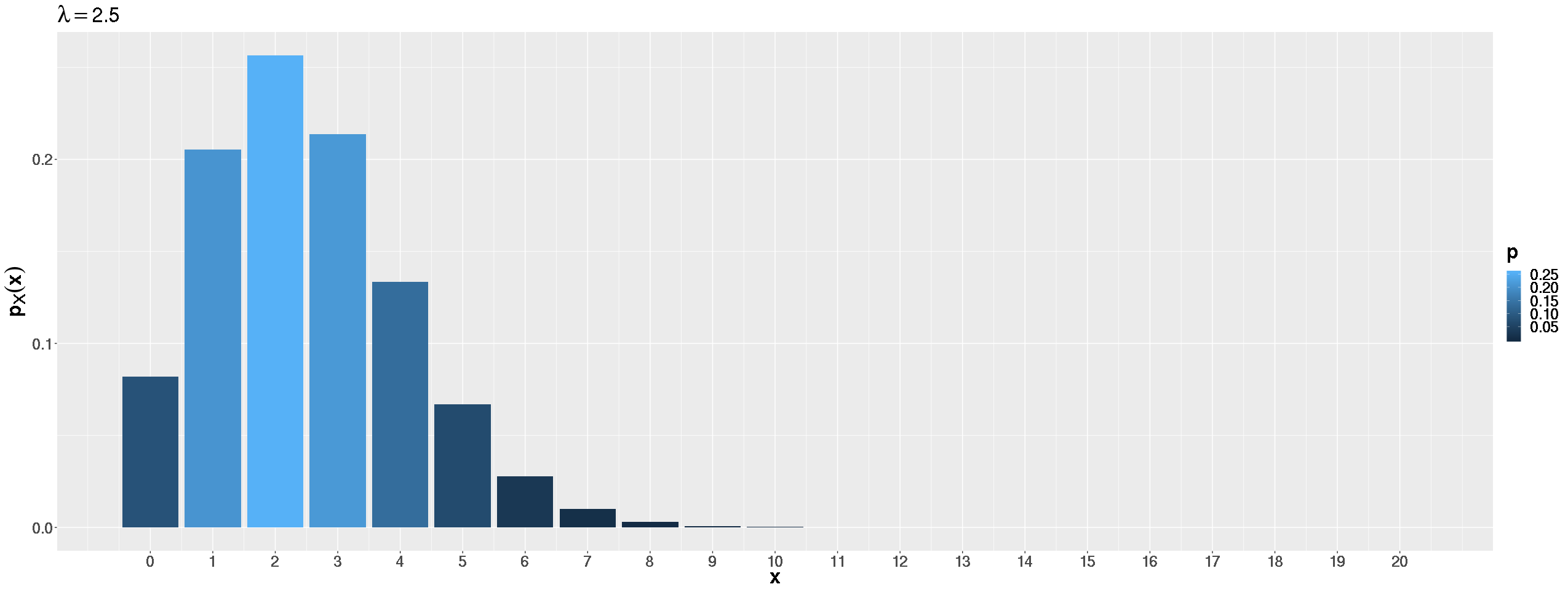

Moderate \(\lambda \, (\lambda=2.5)\)

As \(\lambda\) increases, the mode shifts right and the distribution becomes less skewed. More probability spreads across multiple values.

Fig. 5.15 \(\lambda=2.5\)

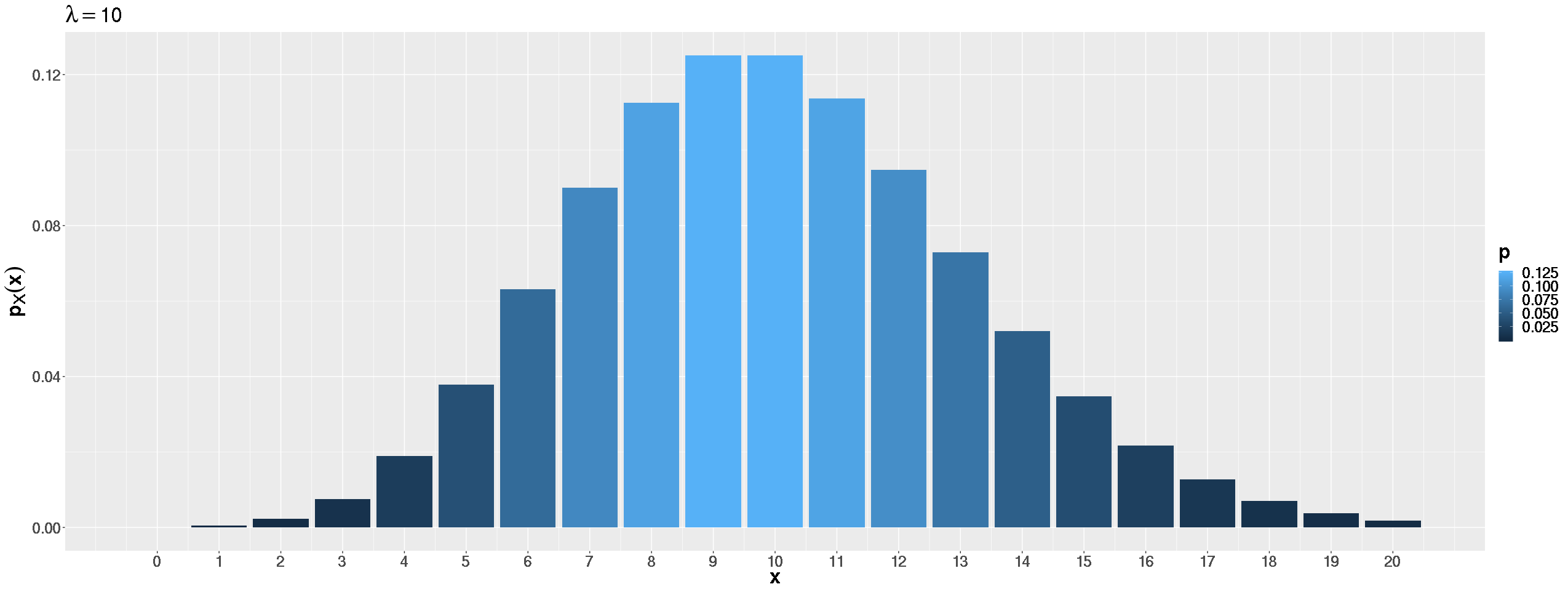

Large \(\lambda \, (\lambda=10)\)

For larger \(\lambda\) values, the distribution approaches a symmetric, bell-shaped curve centered around \(\lambda\). The resemblance to a normal distribution becomes quite strong.

Fig. 5.16 \(\lambda=10\)
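The change in shape can also be quantified. The sketch below computes the mode and the skewness coefficient (third central moment divided by \(\sigma^3\)) from the PMF for the three rates shown in the figures. For a Poisson distribution the skewness equals \(1/\sqrt{\lambda}\), a standard result not derived in this section; the helper names and the truncation at 150 terms are assumptions.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def shape_summary(lam, terms=150):
    """Return (mode, skewness) computed numerically from the truncated PMF."""
    xs = range(terms)
    probs = [poisson_pmf(x, lam) for x in xs]
    # When lam is an integer, lam - 1 and lam are tied, so either may be reported.
    mode = max(xs, key=lambda x: probs[x])
    mean = sum(x * p for x, p in zip(xs, probs))
    var = sum((x - mean) ** 2 * p for x, p in zip(xs, probs))
    third = sum((x - mean) ** 3 * p for x, p in zip(xs, probs))
    return mode, third / var**1.5

summaries = {lam: shape_summary(lam) for lam in (1.0, 2.5, 10.0)}
for lam, (mode, skew) in summaries.items():
    print(lam, mode, round(skew, 3), round(1 / math.sqrt(lam), 3))
```

The skewness shrinks as \(\lambda\) grows but never reaches zero, which matches the visual impression of a distribution that becomes approximately, not exactly, symmetric.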

Example💡: IT Consultant Call Analysis

An IT consultant receives an average of 3 calls per hour. We want to model the number of calls using a Poisson distribution and answer several probability questions.

Setting Up the Model

Let \(X\) count the number of calls the consultant receives in the next hour.

Stationary and Proportional: Call rate is constant over time. ✓

Independent: One call doesn’t influence when the next occurs. ✓

Orderliness: Calls don’t occur simultaneously. ✓

Therefore: \(X \sim \text{Poisson}(\lambda = 3)\).

Solving Probability Problems

Find the probability of exactly one call.

\[P(X = 1) = \frac{e^{-3} \cdot 3^1}{1!} = \frac{3e^{-3}}{1} \approx 0.1494\]

Find the probability of more than one call. Using the complement rule:

\[P(X > 1) = 1 - [P(X = 0) + P(X = 1)] = 1 - [e^{-3} + 3e^{-3}] \approx 0.8009\]

Find the probability of exactly 5 calls in the next two hours.

Since the rate is 3 calls per hour, over two hours we expect 2 × 3 = 6 calls on average. Let \(Y\) count the number of calls in the next two hours. Then \(Y \sim \text{Poisson}(\lambda = 6)\).

\[P(Y = 5) = \frac{e^{-6} \cdot 6^5}{5!} \approx 0.1606\]

Find the probability of 1 call in the next hour and 4 calls in the following hour.

Let \(X_1\) count the calls in the first hour and \(X_2\) the calls in the second hour. Both have an average rate of 3, and since the two intervals are non-overlapping, \(X_1\) and \(X_2\) are independent. This allows us to use the special multiplication rule:

\[\begin{split}P(X_1=1 \cap X_2=4) &= P(X_1=1)P(X_2=4)\\ &=\left(\frac{e^{-3}3^1}{1!}\right)\left(\frac{e^{-3}3^4}{4!}\right) \\ &= (0.1494)(0.1680) \approx 0.0251\end{split}\]
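The four quantities in this example can be evaluated directly. The sketch below uses only the standard library; the variable names are illustrative. Note that the joint probability in the last part is the product of \(P(X_1 = 1)\) and \(P(X_2 = 4)\), each computed with \(\lambda = 3\).

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

rate_per_hour = 3.0

# Exactly one call in the next hour.
p_one = poisson_pmf(1, rate_per_hour)

# More than one call in the next hour, via the complement rule.
p_more_than_one = 1 - (poisson_pmf(0, rate_per_hour) + p_one)

# Exactly five calls in two hours: the rate scales to 2 * 3 = 6.
p_five_in_two_hours = poisson_pmf(5, 2 * rate_per_hour)

# One call in the first hour and four in the second (independent intervals).
p_one_then_four = p_one * poisson_pmf(4, rate_per_hour)

print(p_one, p_more_than_one, p_five_in_two_hours, p_one_then_four)
```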

5.7.5. When to Use Poisson vs. Binomial

Understanding when to use Poisson versus binomial distributions is crucial for proper statistical modeling.

Use Poisson When:

Counting events over continuous intervals (time, space, volume).

Events are rare relative to opportunity.

The number of potential events is very large or unlimited.

You know the average rate but not the total number of trials.

Use Binomial When:

The number of independent trials is fixed.

Each trial has exactly two outcomes.

Probability of success is constant across trials.

You’re counting successes among a known number of attempts.

Poisson as Binomial Approximation

Interestingly, Poisson can approximate binomial when \(n\) is large and \(p\) is small, with \(np \approx \lambda\). This connection highlights how these distributions relate to different aspects of the same underlying counting process.
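To see the approximation at work, the sketch below compares the two PMFs term by term. The values \(n = 1000\) and \(p = 0.003\) are illustrative assumptions (giving \(\lambda = np = 3\)), and the comparison is limited to the first 31 values of \(x\), where essentially all of the probability lies.

```python
import math

def binomial_pmf(x, n, p):
    """P(X = x) for X ~ Binomial(n, p)."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

n, p = 1000, 0.003   # large n, small p (illustrative values)
lam = n * p          # matching Poisson rate

for x in range(7):
    print(x, round(binomial_pmf(x, n, p), 5), round(poisson_pmf(x, lam), 5))

# Largest pointwise difference between the two PMFs over x = 0, ..., 30.
max_gap = max(abs(binomial_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(31))
print(max_gap)
```

Increasing \(n\) while holding \(np\) fixed should shrink the gap further.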

5.7.6. Bringing It All Together

The Poisson distribution serves as a fundamental model for understanding random processes in fields ranging from telecommunications and quality control to epidemiology and reliability engineering. As we’ve seen, the key to successful application lies in recognizing when events satisfy the Poisson assumptions and correctly interpreting the rate parameter \(\lambda\) in context.

Key Takeaways 📝

The Poisson distribution models counts of rare events occurring over fixed intervals of time, space, or volume.

Three key properties that define Poisson processes are stationarity with proportional rates, independence of events, and orderliness (events occur one at a time).

The PMF formula \(p_X(x) = \frac{e^{-\lambda}\lambda^x}{x!}\) applies to any non-negative integer \(x\).

The single parameter \(\lambda\) represents both the mean and variance: \(E[X] = \text{Var}(X) = \lambda\).

The shape of a Poisson distribution evolves from highly right-skewed to approximately symmetric as \(\lambda\) becomes larger.

5.7.7. Exercises

These exercises develop your skills in identifying Poisson processes, calculating Poisson probabilities, scaling the rate parameter for different intervals, and comparing Poisson to binomial distributions.

Exercise 1: Identifying Poisson Processes

For each scenario, determine whether a Poisson distribution is appropriate. If yes, identify the rate parameter \(\lambda\) and the interval. If no, explain which Poisson assumption is violated.

A network router experiences an average of 5 packet errors per hour. Let \(X\) = number of packet errors in the next hour.

A professor receives an average of 12 student emails per day. However, emails tend to cluster right before exams. Let \(X\) = number of emails on a random day.

Cars pass through a toll booth at an average rate of 20 per minute during rush hour. Let \(X\) = number of cars in the next minute.

A website receives an average of 100 visitors per hour. The site goes viral at noon, tripling traffic. Let \(X\) = number of visitors between 11 AM and 1 PM.

Typos occur in a manuscript at an average rate of 2.5 per page. Let \(X\) = number of typos on a randomly selected page.

Solution

Part (a): Packet errors — YES, Poisson

Stationary: Error rate is constant over time ✓

Independent: Errors occur independently ✓

Orderly: Errors happen one at a time ✓

\(X \sim \text{Poisson}(\lambda = 5)\) where the interval is 1 hour.

Part (b): Student emails — NO, not Poisson

Stationary: ❌ The rate is NOT constant — emails cluster before exams.

The non-constant rate violates stationarity. The Poisson model assumes the same average rate regardless of when we observe.

Part (c): Cars at toll booth — YES, Poisson

Stationary: Rate is constant during rush hour ✓

Independent: Car arrivals are independent ✓

Orderly: Cars pass one at a time ✓

\(X \sim \text{Poisson}(\lambda = 20)\) where the interval is 1 minute.

Part (d): Website visitors — NO, not Poisson

Stationary: ❌ The rate CHANGES at noon (triples).

Since the rate isn’t constant over the 2-hour interval, Poisson doesn’t apply. You could model each hour separately with different λ values.

Part (e): Typos per page — YES, Poisson

Stationary: Typo rate is constant per unit of text ✓

Independent: Typos occur independently ✓

Orderly: Typos happen at distinct locations ✓

\(X \sim \text{Poisson}(\lambda = 2.5)\) where the interval is 1 page.

Exercise 2: Basic Poisson Probability Calculations

A help desk receives an average of 4 support tickets per hour during business hours.

Let \(X\) = number of tickets received in the next hour.

Write the distribution of \(X\).

Calculate \(P(X = 3)\), the probability of exactly 3 tickets.

Calculate \(P(X = 0)\), the probability of no tickets.

Calculate \(P(X \leq 2)\), the probability of at most 2 tickets.

Calculate \(P(X > 5)\), the probability of more than 5 tickets.

Find \(E[X]\), \(\text{Var}(X)\), and \(\sigma_X\).

Solution

Part (a): Distribution

\(X \sim \text{Poisson}(\lambda = 4)\)

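Part (b): P(X = 3)

\[P(X = 3) = \frac{e^{-4} \cdot 4^3}{3!} = \frac{64e^{-4}}{6} \approx 0.1954\]

Part (c): P(X = 0)

\[P(X = 0) = \frac{e^{-4} \cdot 4^0}{0!} = e^{-4} \approx 0.0183\]

Part (d): P(X ≤ 2)

\[\begin{split}P(X \leq 2) &= P(X = 0) + P(X = 1) + P(X = 2)\\ &= e^{-4}\left(1 + 4 + \frac{4^2}{2!}\right) = 13e^{-4} \approx 0.2381\end{split}\]

Part (e): P(X > 5)

Using complement: \(P(X > 5) = 1 - P(X \leq 5)\)

\[\begin{split}P(X \leq 5) &= e^{-4}\left(1 + 4 + 8 + \frac{32}{3} + \frac{32}{3} + \frac{128}{15}\right) \approx 0.7851\\ P(X > 5) &\approx 1 - 0.7851 = 0.2149\end{split}\]

Part (f): E[X], Var(X), σ_X

For Poisson, \(E[X] = \text{Var}(X) = \lambda\):

\[E[X] = 4, \quad \text{Var}(X) = 4, \quad \sigma_X = \sqrt{4} = 2\]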

Exercise 3: Scaling the Rate Parameter

A server logs an average of 6 security alerts per day (24 hours).

What is the average rate of alerts per hour?

Let \(X\) = number of alerts in a 4-hour period. Find \(E[X]\) and the distribution of \(X\).

Calculate \(P(X = 0)\) for the 4-hour period.

Let \(Y\) = number of alerts in a full week (7 days). Find \(E[Y]\) and \(\sigma_Y\).

A system administrator gets concerned if there are more than 2 alerts per hour. For a randomly selected hour, what is the probability of triggering concern?

Solution

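Part (a): Rate per hour

\[\lambda_{\text{hr}} = \frac{6 \text{ alerts}}{24 \text{ hours}} = 0.25 \text{ alerts per hour}\]

Part (b): 4-hour period

Scale the rate: \(\lambda_{4\text{hr}} = 4 \times 0.25 = 1\)

\[X \sim \text{Poisson}(\lambda = 1), \quad E[X] = 1\]

Part (c): P(X = 0) for 4-hour period

\[P(X = 0) = \frac{e^{-1} \cdot 1^0}{0!} = e^{-1} \approx 0.3679\]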

There’s about a 36.8% chance of no alerts in any 4-hour window.

Part (d): Full week

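Scale the rate: \(\lambda_{\text{week}} = 7 \times 6 = 42\), so \(Y \sim \text{Poisson}(\lambda = 42)\).

\[E[Y] = 42, \quad \sigma_Y = \sqrt{42} \approx 6.48\]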

Part (e): P(more than 2 per hour)

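For one hour: \(H \sim \text{Poisson}(\lambda = 0.25)\)

\[\begin{split}P(H > 2) &= 1 - [P(H = 0) + P(H = 1) + P(H = 2)]\\ &= 1 - e^{-0.25}\left(1 + 0.25 + \frac{0.25^2}{2!}\right) \approx 1 - 0.9978 = 0.0022\end{split}\]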

There’s only about a 0.22% chance of triggering concern in any given hour.
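The rate scaling in this solution can be sketched in Python. Everything follows from the daily rate of 6 alerts; the variable and function names are illustrative assumptions.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def poisson_cdf(x, lam):
    """P(X <= x) for X ~ Poisson(lam)."""
    return sum(poisson_pmf(k, lam) for k in range(x + 1))

alerts_per_day = 6.0
rate_per_hour = alerts_per_day / 24     # part (a)

lam_4hr = 4 * rate_per_hour             # part (b): rate for a 4-hour window
p_no_alerts_4hr = poisson_pmf(0, lam_4hr)   # part (c)

lam_week = 7 * alerts_per_day           # part (d): rate for a full week
sd_week = math.sqrt(lam_week)

# Part (e): more than 2 alerts in a single hour, via the complement.
p_concern = 1 - poisson_cdf(2, rate_per_hour)

print(rate_per_hour, lam_4hr, p_no_alerts_4hr, lam_week, sd_week, p_concern)
```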

Exercise 4: Independent Intervals

Customers arrive at a coffee shop at an average rate of 10 per hour.

What is the probability of exactly 5 customers arriving between 9:00-9:30 AM?

What is the probability of exactly 3 customers between 9:00-9:30 AM AND exactly 7 customers between 9:30-10:00 AM?

If \(X_1\) = customers in the first half hour and \(X_2\) = customers in the second half hour, explain why \(X_1\) and \(X_2\) are independent.

Verify: \(P(X_1 + X_2 = 10)\) using the distribution of the total.

Solution

Part (a): P(5 customers in 30 min)

Rate for 30 minutes: \(\lambda = 10 \times 0.5 = 5\)

Let \(X_1 \sim \text{Poisson}(5)\):

\[P(X_1 = 5) = \frac{e^{-5} \cdot 5^5}{5!} \approx 0.1755\]

Part (b): P(3 in first half hour AND 7 in second half hour)

Both \(X_1\) and \(X_2\) have \(\lambda = 5\).

Since the intervals don’t overlap, \(X_1\) and \(X_2\) are independent.

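Using the multiplication rule:

\[\begin{split}P(X_1 = 3 \cap X_2 = 7) &= P(X_1 = 3)P(X_2 = 7)\\ &= \left(\frac{e^{-5}5^3}{3!}\right)\left(\frac{e^{-5}5^7}{7!}\right)\\ &\approx (0.1404)(0.1044) \approx 0.0147\end{split}\]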

Part (c): Why independent?

By the Poisson process property, the numbers of events in non-overlapping intervals are independent. Since 9:00-9:30 and 9:30-10:00 don’t overlap, \(X_1\) and \(X_2\) are independent random variables.

Part (d): Verify P(X₁ + X₂ = 10)

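The sum of independent Poisson RVs is also Poisson, with a rate equal to the sum of the individual rates:

\[X_1 + X_2 \sim \text{Poisson}(\lambda = 5 + 5 = 10)\]

\[P(X_1 + X_2 = 10) = \frac{e^{-10} \cdot 10^{10}}{10!} \approx 0.1251\]

This agrees with modeling the full hour directly at the rate of 10 customers per hour.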
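One way to check part (d) numerically is to sum over every way the two half-hour counts can add up to 10 and compare the result with a single Poisson PMF at rate 10. This is a sketch with illustrative names; the same helper is also used for the joint probability in part (b).

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

half_hour_rate = 10 * 0.5   # 5 customers per half hour

# Part (b): independent intervals, so the joint probability is a product.
p_three_then_seven = poisson_pmf(3, half_hour_rate) * poisson_pmf(7, half_hour_rate)

# Part (d): add up P(X1 = k) * P(X2 = 10 - k) over k = 0, ..., 10 ...
p_total_by_convolution = sum(
    poisson_pmf(k, half_hour_rate) * poisson_pmf(10 - k, half_hour_rate)
    for k in range(11)
)

# ... and compare with the PMF of a single Poisson variable at rate 5 + 5 = 10.
p_total_direct = poisson_pmf(10, 2 * half_hour_rate)

print(p_three_then_seven, p_total_by_convolution, p_total_direct)
```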

Exercise 5: Poisson Mean and Variance

Earthquakes of magnitude 4.0 or greater occur in a region at an average rate of 2 per month.

What is the expected number of such earthquakes in a year?

What is the standard deviation of the number of earthquakes per year?

Using the empirical rule approximation (since λ = 24 is fairly large), about 95% of years should have earthquake counts in what range?

A seismologist observes 35 earthquakes in one year. How many standard deviations is this from the expected value?

Based on your answer to (d), would 35 earthquakes be considered unusual?

Solution

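Part (a): Expected per year

Let \(X\) = number of earthquakes in a year. Scaling the monthly rate to 12 months:

\[\lambda_{\text{year}} = 12 \times 2 = 24, \quad E[X] = 24\]

Part (b): Standard deviation per year

For Poisson: \(\text{Var}(X) = \lambda\), so:

\[\sigma_X = \sqrt{24} \approx 4.90\]

Part (c): 95% range using empirical rule

For large λ, Poisson is approximately normal. Using \(\mu \pm 2\sigma\):

\[24 \pm 2(4.90) = 24 \pm 9.80 \quad \Rightarrow \quad (14.2, \ 33.8)\]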

About 95% of years should have between 14.2 and 33.8 earthquakes, or roughly 14 to 34 earthquakes (rounding to integers).

Part (d): How many SDs is 35?

\[z = \frac{35 - 24}{4.90} \approx 2.24\]

35 earthquakes is about 2.24 standard deviations above the mean.

Part (e): Is 35 unusual?

Using the empirical rule, values beyond 2 standard deviations are somewhat unusual (roughly 5% of the time). At 2.24 SDs, this is on the edge of what we’d consider unusual.

More precisely, for large Poisson (approximately normal), about 2.5% of values exceed μ + 2σ ≈ 33.8, so 35 earthquakes, while higher than typical, is not extremely rare — it would occur in roughly 1-2.5% of years.
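The normal-approximation reasoning can be compared with exact Poisson probabilities. The sketch below is hedged: the names are illustrative, the normal tail is computed with `math.erfc` and no continuity correction, and the "inside the range" probability counts the integers strictly between 14.2 and 33.8.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def poisson_cdf(x, lam):
    """P(X <= x) for X ~ Poisson(lam)."""
    return sum(poisson_pmf(k, lam) for k in range(x + 1))

lam_year = 12 * 2.0
sigma = math.sqrt(lam_year)
low, high = lam_year - 2 * sigma, lam_year + 2 * sigma

# Exact probability that the yearly count falls inside (low, high).
p_within = poisson_cdf(math.floor(high), lam_year) - poisson_cdf(math.ceil(low) - 1, lam_year)

# How unusual is 35? z-score, exact Poisson tail, and normal-approximation tail.
z_score = (35 - lam_year) / sigma
exact_tail = 1 - poisson_cdf(34, lam_year)             # P(X >= 35)
normal_tail = 0.5 * math.erfc(z_score / math.sqrt(2))  # P(Z > z_score)

print(low, high, p_within, z_score, exact_tail, normal_tail)
```

Because the Poisson distribution is still slightly right-skewed at \(\lambda = 24\), the exact upper tail tends to be somewhat heavier than the normal approximation suggests.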

Exercise 6: Two Poisson Scenarios with Bayes’ Rule

A call center operates under two conditions:

Condition A (probability 0.6): Calls arrive at rate \(\lambda_A = 3\) per hour

Condition B (probability 0.4): Calls arrive at rate \(\lambda_B = 8\) per hour

On any given hour, one condition applies (determined at random). Let \(X\) = number of calls received.

Find \(P(X = 5 \mid A)\) and \(P(X = 5 \mid B)\).

Use the Law of Total Probability to find \(P(X = 5)\).

Use Bayes’ Rule to find \(P(B \mid X = 5)\).

Find \(P(X \geq 6 \mid A)\) and \(P(X \geq 6 \mid B)\).

Find \(P(B \mid X \geq 6)\). Interpret this result.

Solution

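Part (a): Conditional probabilities

\[P(X = 5 \mid A) = \frac{e^{-3} \cdot 3^5}{5!} \approx 0.1008\]

\[P(X = 5 \mid B) = \frac{e^{-8} \cdot 8^5}{5!} \approx 0.0916\]

Part (b): Law of Total Probability

\[\begin{split}P(X = 5) &= P(X = 5 \mid A)P(A) + P(X = 5 \mid B)P(B)\\ &= (0.1008)(0.6) + (0.0916)(0.4) \approx 0.0971\end{split}\]

Part (c): Bayes’ Rule for P(B | X = 5)

\[P(B \mid X = 5) = \frac{P(X = 5 \mid B)P(B)}{P(X = 5)} = \frac{(0.0916)(0.4)}{0.0971} \approx 0.377\]

Part (d): P(X ≥ 6) under each condition

For Condition A (\(\lambda = 3\)):

\[P(X \geq 6 \mid A) = 1 - P(X \leq 5 \mid A) = 1 - \sum_{x=0}^{5} \frac{e^{-3}3^x}{x!} \approx 1 - 0.9161 = 0.0839\]

For Condition B (\(\lambda = 8\)):

\[P(X \geq 6 \mid B) = 1 - P(X \leq 5 \mid B) = 1 - \sum_{x=0}^{5} \frac{e^{-8}8^x}{x!} \approx 1 - 0.1912 = 0.8088\]

Part (e): Bayes’ Rule for P(B | X ≥ 6)

First, find \(P(X \geq 6)\):

\[P(X \geq 6) = (0.0839)(0.6) + (0.8088)(0.4) \approx 0.3739\]

Then apply Bayes’ Rule:

\[P(B \mid X \geq 6) = \frac{P(X \geq 6 \mid B)P(B)}{P(X \geq 6)} = \frac{(0.8088)(0.4)}{0.3739} \approx 0.865\]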

Interpretation: If we observe 6 or more calls, there’s an 86.5% chance we’re in Condition B. The prior probability of B was only 40%, but observing high call volume strongly suggests the high-rate condition.
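The two Bayes' Rule calculations share the same structure: weight each condition's likelihood by its prior, then normalize. A minimal sketch, with illustrative names and the priors and rates taken from the exercise statement:

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def poisson_cdf(x, lam):
    """P(X <= x) for X ~ Poisson(lam)."""
    return sum(poisson_pmf(k, lam) for k in range(x + 1))

prior = {"A": 0.6, "B": 0.4}
rate = {"A": 3.0, "B": 8.0}

def posterior(likelihood):
    """Apply Bayes' Rule: prior times likelihood, divided by the total probability."""
    joint = {c: prior[c] * likelihood[c] for c in prior}
    total = sum(joint.values())   # Law of Total Probability
    return {c: joint[c] / total for c in joint}

# Evidence 1: exactly 5 calls. Evidence 2: at least 6 calls.
post_eq5 = posterior({c: poisson_pmf(5, rate[c]) for c in prior})
post_ge6 = posterior({c: 1 - poisson_cdf(5, rate[c]) for c in prior})

print(post_eq5["B"], post_ge6["B"])
```

Observing exactly 5 calls moves the probability of Condition B only slightly below its prior, while observing 6 or more calls shifts it strongly toward B.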

5.7.8. Additional Practice Problems

True/False Questions (1 point each)

For a Poisson distribution, \(E[X] = \text{Var}(X) = \lambda\).

Ⓣ or Ⓕ

The Poisson distribution has a maximum possible value (like binomial’s n).

Ⓣ or Ⓕ

If events follow a Poisson process, events in non-overlapping intervals are independent.

Ⓣ or Ⓕ

Doubling the observation interval doubles both the expected value AND the variance.

Ⓣ or Ⓕ

Poisson distributions are always right-skewed regardless of λ.

Ⓣ or Ⓕ

The Poisson distribution can approximate the binomial when n is large and p is small.

Ⓣ or Ⓕ

Multiple Choice Questions (2 points each)

If \(X \sim \text{Poisson}(3)\), what is \(P(X = 0)\)?

Ⓐ 0

Ⓑ \(e^{-3}\)

Ⓒ \(3e^{-3}\)

Ⓓ 0.5

If \(X \sim \text{Poisson}(5)\), what is \(\sigma_X\)?

Ⓐ 5

Ⓑ \(\sqrt{5}\)

Ⓒ 25

Ⓓ 2.5

A store averages 8 customers per hour. What is λ for a 15-minute interval?

Ⓐ 2

Ⓑ 4

Ⓒ 8

Ⓓ 32

Which is NOT a requirement for a Poisson process?

Ⓐ Events occur independently

Ⓑ Events occur at a constant average rate

Ⓒ Events occur one at a time

Ⓓ The number of trials is fixed in advance

Answers to Practice Problems

True/False Answers:

True — This is the defining property of Poisson: the single parameter λ equals both the mean and variance.

False — Poisson has unbounded support: X ∈ {0, 1, 2, 3, …}. Unlike binomial (max = n), there’s no upper limit.

True — Independence of non-overlapping intervals is a key Poisson process property.

True — If λ₁ is the rate for time t, then λ₂ = 2λ₁ for time 2t. Both E[X] = λ and Var(X) = λ double.

False — For small λ, Poisson is right-skewed. But as λ increases (λ > 10 or so), it becomes approximately symmetric.

True — When n is large and p is small with np ≈ λ, Bin(n, p) ≈ Poisson(np).

Multiple Choice Answers:

Ⓑ — \(P(X=0) = \frac{e^{-3} \cdot 3^0}{0!} = e^{-3}\).

Ⓑ — For Poisson, Var(X) = λ = 5, so σ = √5.

Ⓐ — 15 minutes = 0.25 hours, so λ = 8 × 0.25 = 2.

Ⓓ — Poisson counts events over a continuous interval; there’s no “fixed number of trials.” That’s a binomial requirement. Options A, B, C are all Poisson requirements (independence, stationarity, orderliness).