
4.6. Independence of Events

In the previous sections, we explored how knowledge about an event influences the probabilities involving another event. But do events always influence each other? The answer is no. When knowing that one event occurs tells us nothing about another, we say the events are independent. In this section, we take a closer look at the concept of independence.

Road map 🧭

Understand what it means for events to be independent.

Distinguish between independence and mutually exclusive events.

See how independence simplifies probability calculations.

Explore different types of independence: pairwise vs. mutual.

Connect independence to the concepts covered in previous chapters.

4.6.1. Independence

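We say two events \(A\) and \(B\) are independent if the occurrence of one event does not affect the probability of the other. Formally, events \(A\) and \(B\) (with non-zero probabilities) are independent if

\[P(A|B) = P(A) \quad \text{or, equivalently,} \quad P(B|A) = P(B).\]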

The first equation means that knowing \(B\) occurs provides no additional information about \(A\). Likewise, the second equation says that knowing \(A\) occurs does not provide any update on the probability of \(B\).

Since independence is a symmetric relationship between events, the two conditions are equivalent: each one implies the other.

Additional Equivalent Expressions

Independence of \(A\) and \(B\) also implies that each of the pairs \(A'\) and \(B\), \(A\) and \(B'\), and \(A'\) and \(B'\) is independent:

\[P(A'|B) = P(A'), \quad P(A|B') = P(A), \quad P(A'|B') = P(A').\]
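The claim about complements can be checked exactly on a small example. The sketch below reuses the single-die events \(A = \{2, 4, 6\}\) and \(B = \{5, 6\}\) from Exercise 2, which are shown there to be independent. It tests all four pairs using exact fractions. The helper names `prob` and `independent` are illustrative and do not come from the text.

```python
from fractions import Fraction

# Sample space for one roll of a fair die (the setup of Exercise 2).
omega = {1, 2, 3, 4, 5, 6}

def prob(event):
    """Exact probability of an event when all outcomes are equally likely."""
    return Fraction(len(event & omega), len(omega))

def independent(e1, e2):
    """True when P(e1 ∩ e2) equals P(e1) P(e2) exactly."""
    return prob(e1 & e2) == prob(e1) * prob(e2)

A = {2, 4, 6}     # roll is even
B = {5, 6}        # roll is greater than 4
A_c = omega - A   # complement A' = {1, 3, 5}
B_c = omega - B   # complement B' = {1, 2, 3, 4}

pairs = {
    "A, B": (A, B),
    "A', B": (A_c, B),
    "A, B'": (A, B_c),
    "A', B'": (A_c, B_c),
}
for name, (e1, e2) in pairs.items():
    print(name, independent(e1, e2))  # each pair prints True
```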

Special Multiplication Rule

When two events are independent, the general multiplication rule simplifies to:

\[P(A \cap B) = P(A)P(B).\]

Why Is This True?

The general multiplication rule says that for any pair of events \(A\) and \(B\),

\[P(A \cap B) = P(A|B)P(B).\]

Independence of \(A\) and \(B\) implies \(P(A|B) = P(A)\). This lets us replace \(P(A|B)\) with \(P(A)\) in the general multiplication rule, which gives \(P(A \cap B) = P(A)P(B)\).
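A quick simulation shows the special multiplication rule at work. The sketch below generates two events from separate random draws. The probabilities 0.3 and 0.4, the seed, and the number of trials are illustrative values, not taken from the text. Under these assumptions, the estimated \(P(A \cap B)\) should land close to \(P(A)P(B) = 0.12\).

```python
import random

def estimate(n_trials=200_000, p_a=0.3, p_b=0.4, seed=1):
    """Monte Carlo estimates of P(A), P(B), and P(A ∩ B) when A and B
    come from two separate (hence independent) random draws."""
    rng = random.Random(seed)
    count_a = count_b = count_ab = 0
    for _ in range(n_trials):
        a = rng.random() < p_a   # event A occurs with probability p_a
        b = rng.random() < p_b   # event B uses a fresh, independent draw
        count_a += a
        count_b += b
        count_ab += a and b      # both events occur on this trial
    return count_a / n_trials, count_b / n_trials, count_ab / n_trials

p_a_hat, p_b_hat, p_ab_hat = estimate()
print(f"P(A)P(B) ≈ {p_a_hat * p_b_hat:.4f}, P(A ∩ B) ≈ {p_ab_hat:.4f}")
```

The two printed numbers should agree to about two decimal places. Simulation only approximates the rule; it does not prove it.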

‼️ Avoid the common mistake ‼️

This special-case rule can only be used once the independence of \(A\) and \(B\) has been established mathematically. When unsure, always begin with the general version (revisit Section 4.3).

4.6.2. Independence vs. Mutual Exclusivity

It’s important to distinguish between independence and mutual exclusivity, as these concepts are often confused but are fundamentally different. Recall their definitions:

Mutually exclusive (or disjoint) events cannot occur simultaneously. Their intersection is empty.

Independent events provide no information about each other. Knowing that one occurs does not change the probability of the other.

Apart from trivial cases involving events of probability zero, these two concepts are in fact incompatible. To see why, consider two events \(A\) and \(B\), and assume that both of their probabilities are non-zero.

If \(A\) and \(B\) are mutually exclusive, then they cannot be independent.

If \(A\) and \(B\) are mutually exclusive, then \(P(A \cap B) = 0\). Using the conditional probability formula,

\[P(A|B) = \frac{P(A \cap B)}{P(B)} = \frac{0}{P(B)} = 0.\]

But \(P(A) > 0\), so \(P(A|B) = 0 \neq P(A)\). This means \(A\) and \(B\) are not independent.

If \(A\) and \(B\) are independent, then they cannot be mutually exclusive.

If \(A\) and \(B\) are independent, then

\[P(A \cap B) = P(A)P(B) > 0\]

since both \(P(A)\) and \(P(B)\) are non-zero. This means that \(A\) and \(B\) have a non-empty intersection. In other words, they are not mutually exclusive.

4.6.3. Pairwise Independence vs. Mutual Independence

When dealing with more than two events, we can define different levels of independence.

Pairwise Independence

A collection of events \(\{A_1, A_2, \cdots, A_n\}\) is pairwise independent if all pairs of events are independent.

For example, four events \(\{A_1, A_2, A_3, A_4\}\) are pairwise independent if

\[P(A_i \cap A_j) = P(A_i)P(A_j)\]

for all pairs \(i \neq j\) (six pairs in total).

Mutual Independence

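Mutual independence of events is a stronger condition, where the special multiplication rule holds for every sub-collection of the events, not just for pairs. For four events to be mutually independent, they must be pairwise independent and satisfy

\[P(A_i \cap A_j \cap A_k) = P(A_i)P(A_j)P(A_k)\]

for all triplets \(i < j < k\), and

\[P(A_1 \cap A_2 \cap A_3 \cap A_4) = P(A_1)P(A_2)P(A_3)P(A_4).\]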

Pairwise independence does not guarantee mutual independence: there exist events that are pairwise independent but not mutually independent (see Exercise 5).
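Both definitions can be turned into a direct check on a finite, equally likely sample space. Pairwise independence tests only the pairs. Mutual independence tests every sub-collection of size 2, 3, ..., n. The sketch below uses the two-coin-flip events from Exercise 5. The function names are hypothetical helpers written for this illustration.

```python
from fractions import Fraction
from itertools import combinations
from math import prod

# Sample space for two flips of a fair coin (the setup of Exercise 5).
omega = {"HH", "HT", "TH", "TT"}

def prob(event):
    """Exact probability under equally likely outcomes."""
    return Fraction(len(event), len(omega))

def product_rule_holds(events):
    """Check P(E1 ∩ ... ∩ Ek) == P(E1) ... P(Ek) for one sub-collection."""
    joint = set.intersection(*events)
    return prob(joint) == prod(prob(e) for e in events)

def pairwise_independent(events):
    return all(product_rule_holds(pair) for pair in combinations(events, 2))

def mutually_independent(events):
    # Every sub-collection of size 2, 3, ..., n must satisfy the rule.
    return all(
        product_rule_holds(sub)
        for k in range(2, len(events) + 1)
        for sub in combinations(events, k)
    )

A = {"HH", "HT"}  # first flip is heads
B = {"HH", "TH"}  # second flip is heads
C = {"HH", "TT"}  # both flips show the same result
print(pairwise_independent([A, B, C]))  # True
print(mutually_independent([A, B, C]))  # False
```

The number of sub-collections grows as \(2^n - n - 1\). This brute-force check therefore suits small collections of events like the ones in this section.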

Example💡: Circuit Reliability

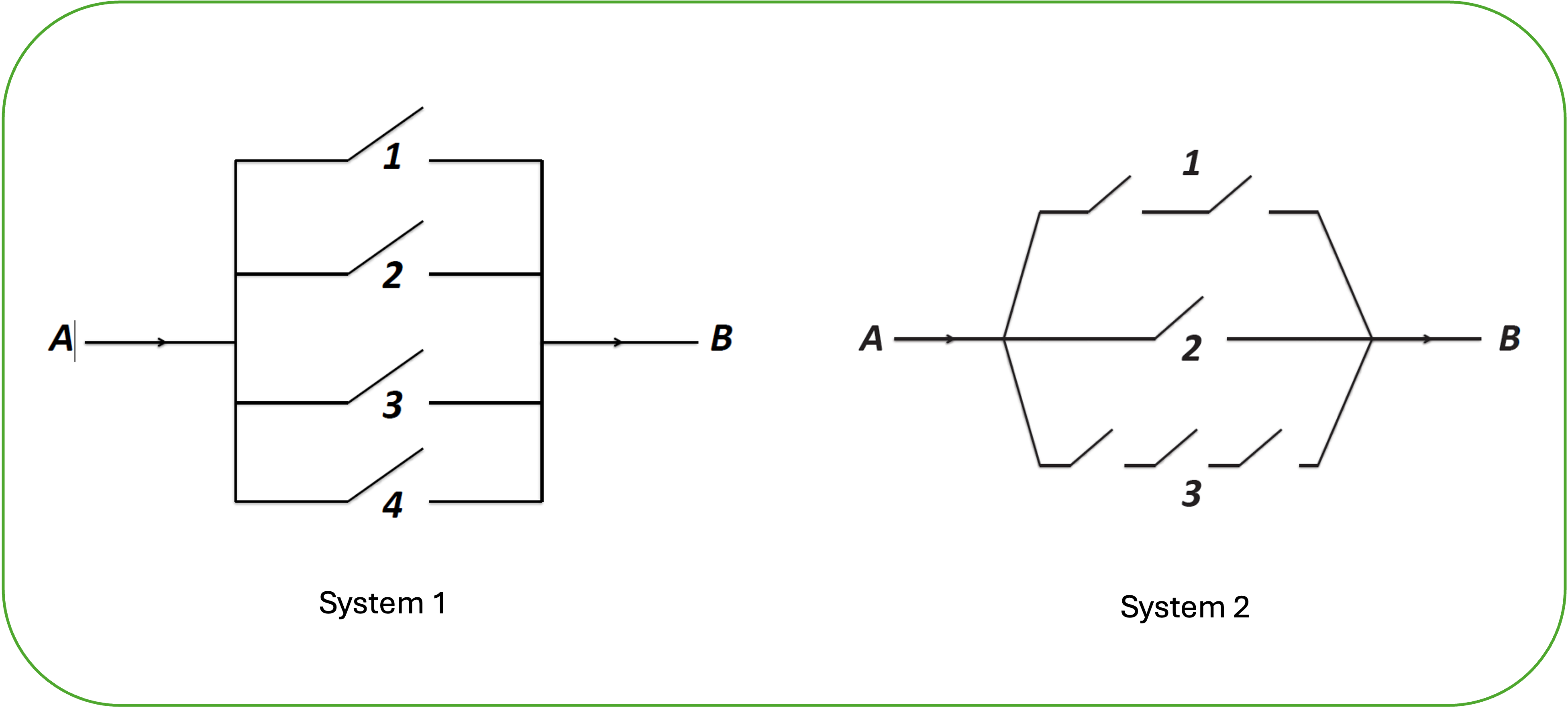

Consider two electrical systems with different circuit configurations.

Fig. 4.22 Diagram of two circuit systems

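System 1: Four parallel paths (labeled 1, 2, 3, 4) connect points A and B, and each path contains a single switch.

System 2: Three parallel paths (labeled 1, 2, 3) connect points A and B, but the switches within each path are in series: path 1 has two switches, path 2 has one, and path 3 has three.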

Each path contains mutually independent switches that are each activated with probability 0.3. The system functions if current can flow from point A to point B. We want to calculate the probability that each system will function.

System 1

Let \(L_i\) denote the event that the \(i\) th line is on, for each \(i=1,2,3,4\).

We are given that \(P(L_i)=0.3\) for all \(i\). This also implies \(P(L_i')=0.7\) for all \(i\).

Let \(F_1^+\) denote the event that system 1 is functioning.

For the system to function, at least one path must be on. In other words, Line 1 or Line 2 or Line 3 or Line 4 must be on. This gives:

\[P(F_1^+) = P(L_1 \cup L_2 \cup L_3 \cup L_4).\]

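Using the complement rule and De Morgan’s law:

\[P(F_1^+) = 1 - P\big((L_1 \cup L_2 \cup L_3 \cup L_4)'\big) = 1 - P(L_1' \cap L_2' \cap L_3' \cap L_4').\]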

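Since the lines operate independently (and therefore so do their complements), we can use the special multiplication rule:

\[P(F_1^+) = 1 - P(L_1')P(L_2')P(L_3')P(L_4') = 1 - (0.7)^4 = 1 - 0.2401 = 0.7599.\]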

System 2

Let \(L_i\) now denote the event that the \(i\) th line is on in the second system, for each \(i=1,2,3\).

Let \(F_2^+\) denote the event that system 2 is functioning.

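The first steps are identical to those for the first system:

\[P(F_2^+) = P(L_1 \cup L_2 \cup L_3) = 1 - P(L_1' \cap L_2' \cap L_3') = 1 - P(L_1')P(L_2')P(L_3').\]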

Now we need to calculate the probability that each line is not functioning.

\(P(L_1') = 1 - P(\text{both switches are on}) = 1-(0.3)^2 = 0.91\)

\(P(L_2') = 1 - 0.3 = 0.7\)

\(P(L_3') = 1 - P(\text{all three switches are on}) = 1-(0.3)^3 = 0.973\)

Putting it all together,

\[P(F_2^+) = 1 - (0.91)(0.7)(0.973) = 1 - 0.6198 = 0.3802.\]

The difference in reliability between the two systems (76% vs. 38%) shows two effects. Placing switches in series within a path lowers that path's reliability, while adding parallel paths raises the system's reliability.
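The same complement-rule calculation can be written as a short function that handles any parallel arrangement of series lines. The sketch below assumes the switch counts per line (two, one, and three for System 2) implied by the calculations above. The helper names are hypothetical.

```python
P_ON = 0.3  # probability that a single switch is on

def line_on(n_switches_in_series, p=P_ON):
    """A line conducts only if every (independent) switch on it is on."""
    return p ** n_switches_in_series

def system_on(lines):
    """Parallel lines: the system fails only if every line is off."""
    p_all_off = 1.0
    for n in lines:
        p_all_off *= 1 - line_on(n)   # independent lines, so multiply P(L_i')
    return 1 - p_all_off

system1 = system_on([1, 1, 1, 1])  # four single-switch lines
system2 = system_on([2, 1, 3])     # switch counts taken from the calculation above
print(f"System 1: {system1:.4f}")  # System 1: 0.7599
print(f"System 2: {system2:.4f}")  # System 2: 0.3802
```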

4.6.4. Bringing It All Together

Key Takeaways 📝

Independence means that knowing one event occurs doesn’t change the probability of another event.

Independence allows us to use the special multiplication rule: \(P(A \cap B) = P(A) P(B)\).

Mutual exclusivity and independence cannot hold simultaneously for events with non-zero probabilities.

Pairwise independence means all pairs of events are independent, while mutual independence requires that all combinations of events follow the special multiplication rule.

Independence often leads to powerful simplifications in computation, but it should only be used after it has been mathematically justified.

4.6.5. Exercises

These exercises develop your understanding of independence, how to test for it mathematically, and how it simplifies probability calculations.

Exercise 1: Testing for Independence

A software company tracks bug reports by severity and by the day of the week they are submitted. Data from 500 bug reports shows:

| | Critical | Non-Critical | Total |
|---|---|---|---|
| Weekday | 80 | 320 | 400 |
| Weekend | 20 | 80 | 100 |
| Total | 100 | 400 | 500 |

Let \(C\) = “bug is critical” and \(W\) = “bug submitted on a weekday.”

(a) Calculate \(P(C)\), \(P(W)\), and \(P(C \cap W)\).

(b) If \(C\) and \(W\) were independent, what would \(P(C \cap W)\) equal?

(c) Are events \(C\) and \(W\) independent? Justify mathematically.

(d) Calculate \(P(C|W)\) and \(P(C|W')\). What do these tell you about the relationship between bug severity and submission day?

Solution

Part (a): Basic Probabilities

\(P(C) = \frac{100}{500} = 0.20\)

\(P(W) = \frac{400}{500} = 0.80\)

\(P(C \cap W) = \frac{80}{500} = 0.16\)

Part (b): If Independent

If \(C\) and \(W\) were independent:

\[P(C \cap W) = P(C) \cdot P(W) = (0.20)(0.80) = 0.16.\]

Part (c): Independence Test

Compare the actual \(P(C \cap W)\) with the product \(P(C) \cdot P(W)\):

Actual: \(P(C \cap W) = 0.16\)

If independent: \(P(C) \cdot P(W) = 0.16\)

Since \(P(C \cap W) = P(C) \cdot P(W)\), yes, C and W are independent.

Part (d): Conditional Probabilities

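\[P(C|W) = \frac{P(C \cap W)}{P(W)} = \frac{0.16}{0.80} = 0.20, \qquad P(C|W') = \frac{P(C \cap W')}{P(W')} = \frac{0.04}{0.20} = 0.20.\]

Both equal \(P(C) = 0.20\), confirming independence.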

Interpretation: The severity of bugs is unrelated to whether they’re submitted on weekdays or weekends. Critical bugs make up 20% of reports regardless of when they’re submitted.
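The independence test above can be run directly from the table counts. The sketch below stores the four cells in a dictionary and computes exact probabilities with `fractions.Fraction`, so the equality check is not affected by floating-point rounding. The dictionary layout and the helper `p` are illustrative choices.

```python
from fractions import Fraction

# Counts from the bug-report table: (submission day, severity) -> count.
counts = {
    ("weekday", "critical"): 80,
    ("weekday", "non-critical"): 320,
    ("weekend", "critical"): 20,
    ("weekend", "non-critical"): 80,
}
total = sum(counts.values())  # 500 reports

def p(day=None, severity=None):
    """Exact probability that a random report matches the given day and severity
    (None means 'any')."""
    hits = sum(
        n for (d, s), n in counts.items()
        if (day is None or d == day) and (severity is None or s == severity)
    )
    return Fraction(hits, total)

p_c = p(severity="critical")
p_w = p(day="weekday")
p_cw = p(day="weekday", severity="critical")

print(p_c, p_w, p_cw)        # 1/5 4/5 4/25
print(p_cw == p_c * p_w)     # True, so C and W are independent
p_c_given_w = p_cw / p_w
p_c_given_not_w = p(day="weekend", severity="critical") / p(day="weekend")
print(p_c_given_w, p_c_given_not_w)  # 1/5 1/5
```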

Exercise 2: Independence vs Mutual Exclusivity

Consider rolling a fair six-sided die once. Define the following events:

\(A\) = “roll is even” = {2, 4, 6}

\(B\) = “roll is greater than 4” = {5, 6}

\(C\) = “roll is less than 3” = {1, 2}

For each pair of events below, determine whether they are:

mutually exclusive, and

independent.

Justify each answer mathematically.

(a) Events A and B

(b) Events A and C

(c) Events B and C

Solution

First, calculate individual probabilities:

\(P(A) = 3/6 = 1/2\)

\(P(B) = 2/6 = 1/3\)

\(P(C) = 2/6 = 1/3\)

Part (a): Events A and B

Mutual Exclusivity:

\(A \cap B = \{6\} \neq \emptyset\)

Not mutually exclusive — rolling a 6 satisfies both events.

Independence:

\(P(A \cap B) = 1/6\)

\(P(A) \cdot P(B) = (1/2)(1/3) = 1/6\)

Since \(P(A \cap B) = P(A) \cdot P(B)\), A and B are independent.

Part (b): Events A and C

Mutual Exclusivity:

\(A \cap C = \{2\} \neq \emptyset\)

Not mutually exclusive — rolling a 2 satisfies both events.

Independence:

\(P(A \cap C) = 1/6\)

\(P(A) \cdot P(C) = (1/2)(1/3) = 1/6\)

Since \(P(A \cap C) = P(A) \cdot P(C)\), A and C are independent.

Part (c): Events B and C

Mutual Exclusivity:

\(B \cap C = \emptyset\) (no number is both > 4 and < 3)

B and C are mutually exclusive.

Independence:

\(P(B \cap C) = 0\)

\(P(B) \cdot P(C) = (1/3)(1/3) = 1/9 \neq 0\)

Since \(P(B \cap C) \neq P(B) \cdot P(C)\), B and C are NOT independent.

Key Insight: Events B and C illustrate that mutually exclusive events with non-zero probabilities cannot be independent. Knowing one occurs tells you the other definitely didn’t occur.
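Both properties can be checked mechanically for every pair. The sketch below encodes the three die events as Python sets. Mutual exclusivity becomes an empty intersection, and independence becomes an exact comparison of \(P(E_1 \cap E_2)\) with \(P(E_1)P(E_2)\). The `classify` helper is hypothetical.

```python
from fractions import Fraction
from itertools import combinations

omega = set(range(1, 7))  # one roll of a fair six-sided die
events = {
    "A": {2, 4, 6},   # roll is even
    "B": {5, 6},      # roll is greater than 4
    "C": {1, 2},      # roll is less than 3
}

def prob(event):
    """Exact probability under equally likely outcomes."""
    return Fraction(len(event), len(omega))

def classify(e1, e2):
    """Return (mutually exclusive?, independent?) for two events."""
    exclusive = not (e1 & e2)
    independent = prob(e1 & e2) == prob(e1) * prob(e2)
    return exclusive, independent

for (n1, e1), (n2, e2) in combinations(events.items(), 2):
    exclusive, independent = classify(e1, e2)
    print(f"{n1},{n2}: exclusive={exclusive}, independent={independent}")
# A,B: exclusive=False, independent=True
# A,C: exclusive=False, independent=True
# B,C: exclusive=True, independent=False
```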

Exercise 3: System Reliability — Series Configuration

A data pipeline has three processing stages that operate independently:

Stage 1 (Data Ingestion): 98% reliability

Stage 2 (Data Transformation): 95% reliability

Stage 3 (Data Storage): 99% reliability

The pipeline functions only if all three stages work (series configuration).

(a) What is the probability that the entire pipeline functions?

(b) Which stage contributes most to pipeline failures? Justify your answer.

(c) If the company wants the overall pipeline reliability to be at least 95%, and they can only improve one stage, which stage should they focus on and what reliability would that stage need?

(d) If they add a fourth stage with 97% reliability, what is the new pipeline reliability?

Solution

Let \(S_1, S_2, S_3\) denote the events that stages 1, 2, 3 work, respectively.

Given: \(P(S_1) = 0.98\), \(P(S_2) = 0.95\), \(P(S_3) = 0.99\)

Part (a): Pipeline Reliability

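For a series system, all components must work. Since stages operate independently:

\[P(S_1 \cap S_2 \cap S_3) = P(S_1)P(S_2)P(S_3) = (0.98)(0.95)(0.99) = 0.92169 \approx 0.9217.\]

The pipeline has about 92.17% reliability.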

Part (b): Identifying the Bottleneck

The stage with the lowest reliability contributes most to failures.

Stage 1: 2% failure rate

Stage 2: 5% failure rate ← Highest failure rate

Stage 3: 1% failure rate

Stage 2 (Data Transformation) is the bottleneck with a 5% failure rate.

Part (c): Achieving 95% Overall Reliability

Current: \((0.98)(0.95)(0.99) \approx 0.9217\)

Target: 0.95

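Focus on Stage 2 (the bottleneck). Improving Stage 1 or Stage 3 alone cannot reach the target: even at 100% reliability, the pipeline would reach only \((0.95)(0.99) = 0.9405\) or \((0.98)(0.95) = 0.931\), respectively. Let \(p\) be the new Stage 2 reliability. Then

\[(0.98)\,p\,(0.99) \geq 0.95 \quad\Longrightarrow\quad p \geq \frac{0.95}{0.9702} \approx 0.9792.\]

Stage 2 needs at least 97.92% reliability (up from 95%).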

Part (d): Adding a Fourth Stage

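Let \(P(S_4) = 0.97\). Then

\[P(S_1 \cap S_2 \cap S_3 \cap S_4) = (0.92169)(0.97) \approx 0.8940.\]

Adding a fourth stage reduces overall reliability to about 89.4%. In a series system, every additional component with reliability below 100% decreases overall reliability.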
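The arithmetic in parts (a), (c), and (d) can be reproduced with a few lines of code. The sketch below assumes independent stages, as the exercise states, so series reliability is simply a product. The name `series_reliability` is a hypothetical helper.

```python
from math import prod

stages = [0.98, 0.95, 0.99]   # P(S1), P(S2), P(S3)

def series_reliability(ps):
    """All independent stages must work, so multiply their reliabilities."""
    return prod(ps)

current = series_reliability(stages)
# Smallest Stage 2 reliability p with 0.98 * p * 0.99 >= 0.95.
p_needed = 0.95 / (stages[0] * stages[2])
with_fourth = series_reliability(stages + [0.97])

print(f"{current:.4f} {p_needed:.4f} {with_fourth:.4f}")  # 0.9217 0.9792 0.8940
```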

Exercise 4: System Reliability — Parallel Configuration

A web application uses redundant servers for high availability. Three servers are configured in parallel — the system functions if at least one server is operational.

Each server has a 90% probability of being operational (servers fail independently).

(a) What is the probability that the system functions?

(b) What is the probability that exactly two servers are operational?

(c) If the company adds a fourth server (also 90% reliable), what is the new system reliability?

(d) How many 90%-reliable servers would be needed to achieve 99.99% system reliability?

Solution

Let \(S_i\) = “server \(i\) is operational” with \(P(S_i) = 0.90\) and \(P(S_i') = 0.10\).

Part (a): System Reliability (At Least One Server)

Use the complement: P(system works) = 1 − P(all servers fail)

By independence:

\[P(\text{all fail}) = (0.10)^3 = 0.001, \qquad P(\text{system works}) = 1 - 0.001 = 0.999.\]

System reliability: 99.9%

Part (b): Exactly Two Servers Operational

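“Exactly two” means one fails and two work. There are \(\binom{3}{2} = 3\) ways to choose which two work, and each arrangement has probability \((0.90)^2(0.10)\):

\[P(\text{exactly two}) = 3(0.90)^2(0.10) = 3(0.081) = 0.243.\]

There is a 24.3% probability that exactly two servers are operational.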

Part (c): Adding a Fourth Server

\[1 - (0.10)^4 = 1 - 0.0001 = 0.9999.\]

With four servers, reliability increases to 99.99%.

Part (d): Achieving 99.99% Reliability

We need \(1 - (0.10)^n \geq 0.9999\), that is,

\[(0.10)^n \leq 0.0001 = (0.10)^4 \quad\Longrightarrow\quad n \geq 4.\]

Four servers are needed for 99.99% reliability.

Key Insight: Parallel configurations dramatically improve reliability. Three 90%-reliable servers give 99.9% system reliability, while three such servers in series would give only \((0.90)^3 = 72.9\%\).
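All four parts of this exercise can be checked with a short script. The sketch below uses exact fractions so that the threshold comparison in part (d) is not affected by floating-point rounding. The helper names are hypothetical, and the servers are assumed to fail independently, as the exercise states.

```python
from fractions import Fraction
from math import comb

P_UP = Fraction(9, 10)  # each server is operational with probability 0.90

def parallel_reliability(n, p=P_UP):
    """At least one of n independent servers works: 1 - P(all n fail)."""
    return 1 - (1 - p) ** n

def exactly_k_up(k, n, p=P_UP):
    """C(n, k) ways to pick the working servers, each with probability p^k (1-p)^(n-k)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def servers_needed(target, p=P_UP):
    """Smallest n whose parallel reliability reaches the target."""
    n = 1
    while parallel_reliability(n, p) < target:
        n += 1
    return n

print(float(parallel_reliability(3)))         # 0.999
print(float(exactly_k_up(2, 3)))              # 0.243
print(float(parallel_reliability(4)))         # 0.9999
print(servers_needed(Fraction(9999, 10000)))  # 4
print(float(P_UP**3))                         # 0.729 (same servers in series)
```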

Exercise 5: Pairwise vs Mutual Independence

A fair coin is flipped twice. Define the following events:

\(A\) = “first flip is heads”

\(B\) = “second flip is heads”

\(C\) = “both flips show the same result” (both heads or both tails)

(a) List the sample space and identify which outcomes belong to each event.

(b) Calculate \(P(A)\), \(P(B)\), \(P(C)\), \(P(A \cap B)\), \(P(A \cap C)\), and \(P(B \cap C)\).

(c) Check whether each pair of events is independent:

Are A and B independent?

Are A and C independent?

Are B and C independent?

(d) Calculate \(P(A \cap B \cap C)\). Is it equal to \(P(A) \cdot P(B) \cdot P(C)\)?

(e) Are A, B, and C mutually independent? Explain.

Solution

Part (a): Sample Space

\(\Omega = \{HH, HT, TH, TT\}\), each with probability 1/4.

\(A = \{HH, HT\}\) — first flip is H

\(B = \{HH, TH\}\) — second flip is H

\(C = \{HH, TT\}\) — both same

Part (b): Probabilities

\(P(A) = 2/4 = 1/2\)

\(P(B) = 2/4 = 1/2\)

\(P(C) = 2/4 = 1/2\)

\(P(A \cap B) = P(\{HH\}) = 1/4\)

\(P(A \cap C) = P(\{HH\}) = 1/4\)

\(P(B \cap C) = P(\{HH\}) = 1/4\)

Part (c): Pairwise Independence

A and B:

\(P(A \cap B) = 1/4\)

\(P(A) \cdot P(B) = (1/2)(1/2) = 1/4\) ✓

A and B are independent.

A and C:

\(P(A \cap C) = 1/4\)

\(P(A) \cdot P(C) = (1/2)(1/2) = 1/4\) ✓

A and C are independent.

B and C:

\(P(B \cap C) = 1/4\)

\(P(B) \cdot P(C) = (1/2)(1/2) = 1/4\) ✓

B and C are independent.

All pairs are independent — the events are pairwise independent.

Part (d): Triple Intersection

\(A \cap B \cap C = \{HH\}\) (first H, second H, both same)

\(P(A \cap B \cap C) = 1/4\)

\(P(A) \cdot P(B) \cdot P(C) = (1/2)(1/2)(1/2) = 1/8\)

\(P(A \cap B \cap C) = 1/4 \neq 1/8 = P(A) \cdot P(B) \cdot P(C)\)

Part (e): Mutual Independence

No, A, B, and C are NOT mutually independent.

Although all pairs are independent (pairwise independence), the triple product rule fails. This is a classic example showing that pairwise independence does not imply mutual independence.

Intuition: If you know A occurred (first flip H) AND B occurred (second flip H), then C must occur (both same). So knowing A and B together completely determines C, even though knowing A alone or B alone doesn’t help predict C.

Exercise 6: Independent Events and Complements

Network packets are transmitted through two independent routers. Router 1 successfully forwards a packet with probability 0.95, and Router 2 successfully forwards with probability 0.92.

Let \(R_1\) = “Router 1 succeeds” and \(R_2\) = “Router 2 succeeds.”

(a) Verify that \(R_1\) and \(R_2\) being independent implies \(R_1'\) and \(R_2\) are also independent.

(b) Calculate the probability that both routers fail.

(c) Calculate the probability that exactly one router succeeds.

(d) A packet is successfully delivered if both routers succeed (series). What is P(delivered)?

(e) If the routers were configured in parallel (packet delivered if at least one succeeds), what would P(delivered) be?

Solution

Given: \(P(R_1) = 0.95\), \(P(R_2) = 0.92\), and \(R_1 \perp R_2\) (independent).

Part (a): Complement Independence

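If \(R_1\) and \(R_2\) are independent, we need to show \(P(R_1' \cap R_2) = P(R_1') \cdot P(R_2)\). Since \(R_2\) is the disjoint union of \(R_1 \cap R_2\) and \(R_1' \cap R_2\),

\[P(R_1' \cap R_2) = P(R_2) - P(R_1 \cap R_2) = P(R_2) - P(R_1)P(R_2) = \big(1 - P(R_1)\big)P(R_2) = P(R_1')P(R_2).\]

Numerically, \(P(R_1' \cap R_2) = (0.05)(0.92) = 0.046\).

This confirms \(R_1'\) and \(R_2\) are independent ✓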

Similarly, \(R_1\) and \(R_2'\), and \(R_1'\) and \(R_2'\) are all independent.

Part (b): Both Routers Fail

By independence of complements:

\[P(R_1' \cap R_2') = P(R_1')P(R_2') = (0.05)(0.08) = 0.004.\]

0.4% probability both fail.

Part (c): Exactly One Succeeds

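“Exactly one” = (\(R_1\) and not \(R_2\)) OR (not \(R_1\) and \(R_2\)). These two cases are mutually exclusive, so their probabilities add:

\[P(R_1 \cap R_2') + P(R_1' \cap R_2) = (0.95)(0.08) + (0.05)(0.92) = 0.076 + 0.046 = 0.122.\]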

12.2% probability exactly one succeeds.

Part (d): Series Configuration (Both Must Succeed)

\[P(R_1 \cap R_2) = P(R_1)P(R_2) = (0.95)(0.92) = 0.874.\]

87.4% delivery rate in series.

Part (e): Parallel Configuration (At Least One Succeeds)

\[P(R_1 \cup R_2) = 1 - P(R_1' \cap R_2') = 1 - 0.004 = 0.996.\]

99.6% delivery rate in parallel.

Comparison: Parallel configuration (99.6%) is much more reliable than series (87.4%) for the same components.
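The five parts reduce to a handful of products once the complements are written down. The sketch below assumes independent routers, as the exercise states. It also confirms that the series case plus the exactly-one case equals the parallel case, because "at least one succeeds" splits into those two disjoint events.

```python
p1, p2 = 0.95, 0.92          # P(R1), P(R2); routers assumed independent
q1, q2 = 1 - p1, 1 - p2      # P(R1'), P(R2')

both_fail = q1 * q2                  # independence of complements
exactly_one = p1 * q2 + q1 * p2      # two disjoint cases, so add them
series = p1 * p2                     # both must succeed
parallel = 1 - both_fail             # at least one succeeds

print(f"{both_fail:.3f} {exactly_one:.3f} {series:.3f} {parallel:.3f}")
# 0.004 0.122 0.874 0.996
```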

4.6.6. Additional Practice Problems

True/False Questions (1 point each)

If events A and B are independent, then \(P(A \cap B) = P(A) \cdot P(B)\).

Ⓣ or Ⓕ

If events A and B are mutually exclusive with \(P(A) > 0\) and \(P(B) > 0\), then they are independent.

Ⓣ or Ⓕ

If A and B are independent, then A and B’ are also independent.

Ⓣ or Ⓕ

Pairwise independence of three events implies mutual independence.

Ⓣ or Ⓕ

In a series system, adding more independent components always decreases system reliability.

Ⓣ or Ⓕ

If \(P(A|B) = P(A)\), then A and B are independent.

Ⓣ or Ⓕ

Multiple Choice Questions (2 points each)

Two events A and B have \(P(A) = 0.4\), \(P(B) = 0.5\), and \(P(A \cap B) = 0.2\). Are A and B independent?

Ⓐ Yes, because \(P(A \cap B) = P(A) \cdot P(B)\)

Ⓑ No, because \(P(A \cap B) \neq P(A) \cdot P(B)\)

Ⓒ Yes, because they are not mutually exclusive

Ⓓ Cannot be determined

Three independent components each have 80% reliability. What is the reliability of a system where all three must work (series)?

Ⓐ 0.512

Ⓑ 0.800

Ⓒ 0.992

Ⓓ 2.400

For the same three components in parallel (at least one must work), the system reliability is:

Ⓐ 0.512

Ⓑ 0.800

Ⓒ 0.992

Ⓓ 0.008

If P(A) = 0.3 and P(B) = 0.4, and A and B are mutually exclusive, what is \(P(A \cap B)\)?

Ⓐ 0.00

Ⓑ 0.12

Ⓒ 0.70

Ⓓ Cannot be determined without more information

Answers to Practice Problems

True/False Answers:

True — This is the special multiplication rule, which defines independence.

False — Mutually exclusive events with non-zero probabilities are NEVER independent. If A occurs, B definitely doesn’t (P(B|A) = 0 ≠ P(B)).

True — Independence of A and B implies independence of A and B’, B and A’, and A’ and B’.

False — Pairwise independence does NOT imply mutual independence. The coin flip example (Exercise 5) shows three pairwise independent events that are not mutually independent.

True — In series, P(system) = P(C₁) × P(C₂) × … Each additional component (with P < 1) multiplies by a value less than 1, decreasing reliability.

True — This is an equivalent definition of independence. P(A|B) = P(A) means knowing B doesn’t change the probability of A.

Multiple Choice Answers:

Ⓐ — Check: P(A) × P(B) = 0.4 × 0.5 = 0.2 = P(A ∩ B). Since the product equals P(A ∩ B), A and B are independent.

Ⓐ — Series reliability = (0.8)³ = 0.512

Ⓒ — Parallel reliability = 1 − P(all fail) = 1 − (0.2)³ = 1 − 0.008 = 0.992

Ⓐ — Mutually exclusive events have no overlap, so P(A ∩ B) = 0 by definition.