5.6. The Binomial Distribution

Certain patterns in probability occur so frequently that they deserve special attention. When we flip coins repeatedly, test multiple products for defects, or survey people about their preferences, we encounter the same underlying structure: a fixed number of independent trials, each with two possible outcomes. The binomial distribution captures this common scenario, providing us with ready-made formulas that eliminate the need to build probability models from scratch every time.

Road Map 🧭

Identify when to use the binomial distribution through the BInS criteria.

Master the binomial probability mass function and its parameters.

Calculate expected value and variance using distribution properties.

Apply binomial models to real-world counting problems.

Connect binomial random variables to sums of independent Bernoulli trials.

5.6.1. Binomial Experiments

The binomial distribution emerges naturally when we perform the same basic experiment multiple times under controlled conditions. Think about flipping a coin ten times and counting heads, or testing twenty manufactured items for defects. These scenarios share a common structure that statisticians have studied extensively.

The BInS Criteria

A binomial experiment must satisfy four key properties, which we can remember using the acronym BInS:

The BInS checklist

| Letter | Word | Meaning |
|---|---|---|
| B | Binary | Each trial has exactly two possible outcomes (success or failure). |
| I | Independent | Outcomes of different trials don’t influence one another. |
| n | Fixed (n) trials | The number of trials is set in advance. |
| S | Same (p) | The probability of success is identical for every trial. |

Examples💡: Identifying a Binomial Experiment through BInS

For each given scenario, determine whether the experiment fits the BInS criteria.

Example 1: Rolling a Die

Suppose we roll a fair four-sided die five times and observe whether each outcome is a 1 or not.

Binary: Each roll either shows a 1 (success) or doesn’t (failure). ✓

Independent: Each roll doesn’t affect subsequent rolls. ✓

n-trials: We perform exactly 5 rolls. ✓

Success: The probability of rolling a 1 remains 1/4 on every trial. ✓

This satisfies all BInS criteria, so it’s a binomial experiment.

Example 2: Drug Trial

Twenty patients with the same condition receive either a drug or placebo, and we measure whether the treatment is effective.

Binary: This seems binary (effective/not effective), but there’s added complexity because patients receive different treatments. 🤔

Independent: Patient outcomes are independent. ✓

n-trials: We have 20 patients. ✓

Success: The success probability may differ between patients who receive the drug and those who receive the placebo. ❌

The experiment is not a binomial experiment.

Example 3: Quality Control

We randomly sample 15 products from different assembly lines in a factory and classify each as acceptable or not acceptable.

Binary: Each product is either acceptable or not. ✓

Independent: Random sampling makes outcomes independent. ✓

n-trials: We test exactly 15 products. ✓

Success: Different assembly lines may have different success rates. ❌

This is not a binomial experiment.

Example 4: A different drug trial

A pharmaceutical company has developed a new medication for a common illness. In a clinical trial, 10 randomly selected patients receive the new medication to see if they recover within a fixed amount of time.

Binary: Each patient either recovers or doesn’t. ✓

Independent: One patient’s recovery doesn’t affect another’s. ✓

n-trials: We observe exactly 10 patients. ✓

Success: Recovery probability is equal for each patient since they are given the same medication. ✓

This is a binomial experiment.

5.6.2. The Binomial Distribution

When an experiment satisfies the BInS criteria, we can model the number of successes using a binomial distribution.

Definition

A binomial random variable \(X\) maps each outcome in a binomial experiment to the number of successes in \(n\) trials. We write this as:

\[X \sim \text{Bin}(n, p)\]

where

\(n\) is a positive integer representing the number of trials and

\(p \in [0,1]\) is the probability of success on each trial.

Parameters

The quantities that specify a complete PMF within a known family of distributions are called parameters. \(n\) and \(p\) are the parameters of the binomial distribution.

Probability Mass Function

The binomial PMF gives us the probability of exactly \(x\) successes in \(n\) trials:

\[p_X(x) = P(X = x) = \binom{n}{x} p^x (1-p)^{n-x}\]

for \(x \in \text{supp}(X) = \{0, 1, 2, ..., n\}\).

This formula has three components:

Combinations:

\(\binom{n}{x} = \frac{n!}{x!(n-x)!}\) counts the number of ways to arrange \(x\) successes among \(n\) trials.

Success probability:

\(p^x\) is the probability that the \(x\) trials designated as successes all succeed.

Failure probability:

\((1-p)^{n-x}\) is the probability that the remaining \(n-x\) trials all fail.

The beauty of this formula lies in its generality. Once we know \(n\) and \(p\), we can calculate the probability for any number of successes without listing all possible outcomes.
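As a minimal sketch, the three components of the PMF can be combined in a few lines of Python using only the standard library. The function name `binom_pmf` is a hypothetical helper chosen for illustration, not something defined by the course materials.

```python
from math import comb

def binom_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) for X ~ Bin(n, p)."""
    if not 0 <= x <= n:
        return 0.0  # x lies outside the support {0, 1, ..., n}
    # combinations * success probability * failure probability
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# A fair coin flipped 6 times: probability of exactly 3 heads
print(binom_pmf(3, 6, 0.5))  # 0.3125

# The probabilities over the whole support add up to 1 (up to rounding)
total = sum(binom_pmf(x, 6, 0.5) for x in range(7))
print(total)
```

Only \(n\) and \(p\) are needed as inputs; no outcomes are listed explicitly.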

Validating the binomial PMF

It is clear that for each \(x \in \text{supp}(X)\), \(p_X(x)\) gives a non-negative value, since the three components which make up its formula are non-negative. By verifying these terms sum to 1, we will also be able to argue that each \(p_X(x)\) is at most 1.

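We compute the sum of all \(p_X(x)\) terms using the binomial theorem:

\[\sum_{x=0}^{n} p_X(x) = \sum_{x=0}^{n} \binom{n}{x} p^x (1-p)^{n-x} = \big(p + (1-p)\big)^n = 1^n = 1\]

So, the binomial PMF satisfies both conditions for validity.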

5.6.3. Expected Value and Variance: Building from Bernoulli Trials

Rather than deriving the expected value and variance from the PMF directly, we can use a clever approach that connects the binomial distribution to simpler building blocks.

Bernoulli Random Variables

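A Bernoulli random variable \(B\) represents the outcome of a single trial in a binomial experiment:

\[B = \begin{cases} 1 & \text{if the trial is a success (probability } p\text{)} \\ 0 & \text{if the trial is a failure (probability } 1-p\text{)} \end{cases}\]

For this simple random variable:

\[E[B] = 1 \cdot p + 0 \cdot (1-p) = p\]

\[\text{Var}(B) = E[B^2] - (E[B])^2 = p - p^2 = p(1-p)\]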

Connecting Bernoulli to Binomial

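A binomial random variable is simply the sum of \(n\) independent Bernoulli random variables that share the same success probability \(p\):

\[X = B_1 + B_2 + \cdots + B_n = \sum_{i=1}^{n} B_i\]

where each \(B_i\) represents the \(i\)-th trial.

Using the linearity of expectation:

\[E[X] = \sum_{i=1}^{n} E[B_i] = np\]

Since the trials are independent, we can also add their variances:

\[\text{Var}(X) = \sum_{i=1}^{n} \text{Var}(B_i) = np(1-p)\]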

Summary of Binomial Distribution Properties

For \(X \sim \text{Bin}(n, p)\):

\[E[X] = np, \qquad \text{Var}(X) = np(1-p), \qquad \sigma_X = \sqrt{np(1-p)}\]

These formulas align with our intuition:

If we perform \(n\) trials with probability \(p\) of success each time, we expect about \(np\) successes on average.

The variance reflects that we get maximum uncertainty when \(p = 0.5\) (each trial is equally likely to succeed or fail) and minimum uncertainty when \(p\) is close to 0 or 1.
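One way to check these formulas numerically is to compute the mean and variance directly from the PMF and compare them with \(np\) and \(np(1-p)\). The sketch below assumes Python with only the standard library; `binom_pmf` and `mean_and_variance` are illustrative helper names.

```python
from math import comb

def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def mean_and_variance(n, p):
    """E[X] and Var(X) computed from the PMF, without the shortcut formulas."""
    support = range(n + 1)
    mean = sum(x * binom_pmf(x, n, p) for x in support)
    second_moment = sum(x**2 * binom_pmf(x, n, p) for x in support)
    return mean, second_moment - mean**2

n = 10
for p in (0.1, 0.25, 0.5, 0.9):
    mean, var = mean_and_variance(n, p)
    # Columns: p, mean from PMF, np, variance from PMF, np(1-p)
    print(p, round(mean, 6), n * p, round(var, 6), round(n * p * (1 - p), 6))
```

Among these four values of \(p\), the variance is largest at \(p = 0.5\), in line with the intuition above.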

5.6.4. Visualizing Binomial Distributions

The shape of a binomial distribution depends heavily on its parameters. Let’s examine how changing \(p\) affects the distribution when \(n = 10\):

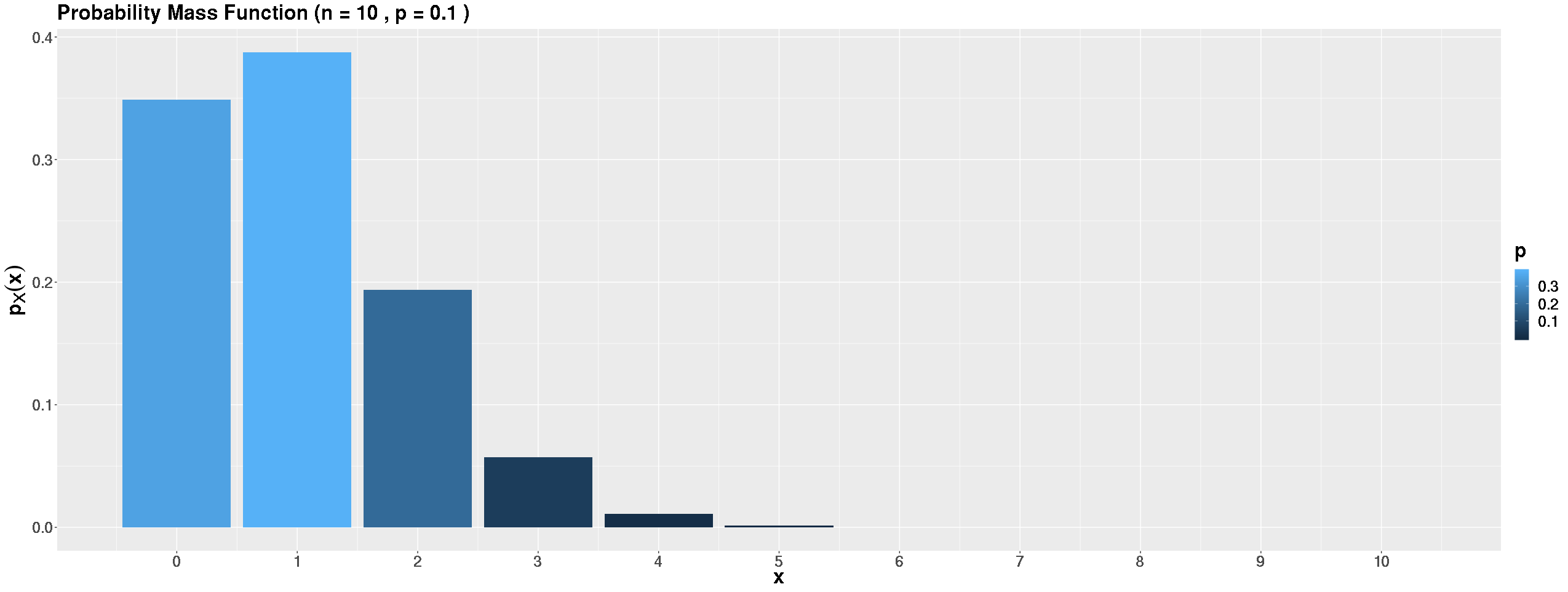

Low Success Probability (\(p = 0.1\))

Fig. 5.10 \(p=0.1\)

When success is rare, most probability concentrates near zero. We expect \(np = 1\) success on average, so getting 0 or 1 success is most likely, with higher counts becoming increasingly rare.

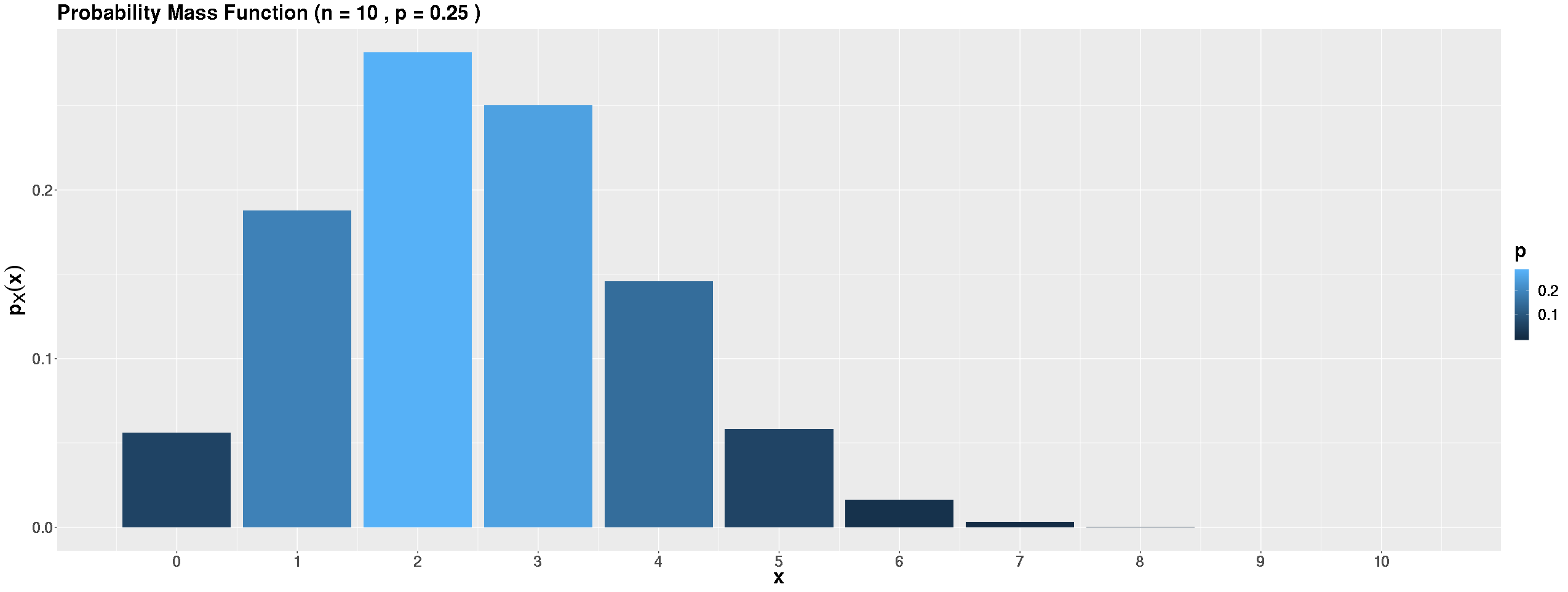

Moderate Success Probability (\(p = 0.25\))

Fig. 5.11 \(p=0.25\)

As \(p\) increases, the distribution shifts right but remains skewed. We expect 2.5 successes on average, with reasonable probability spread across several values.

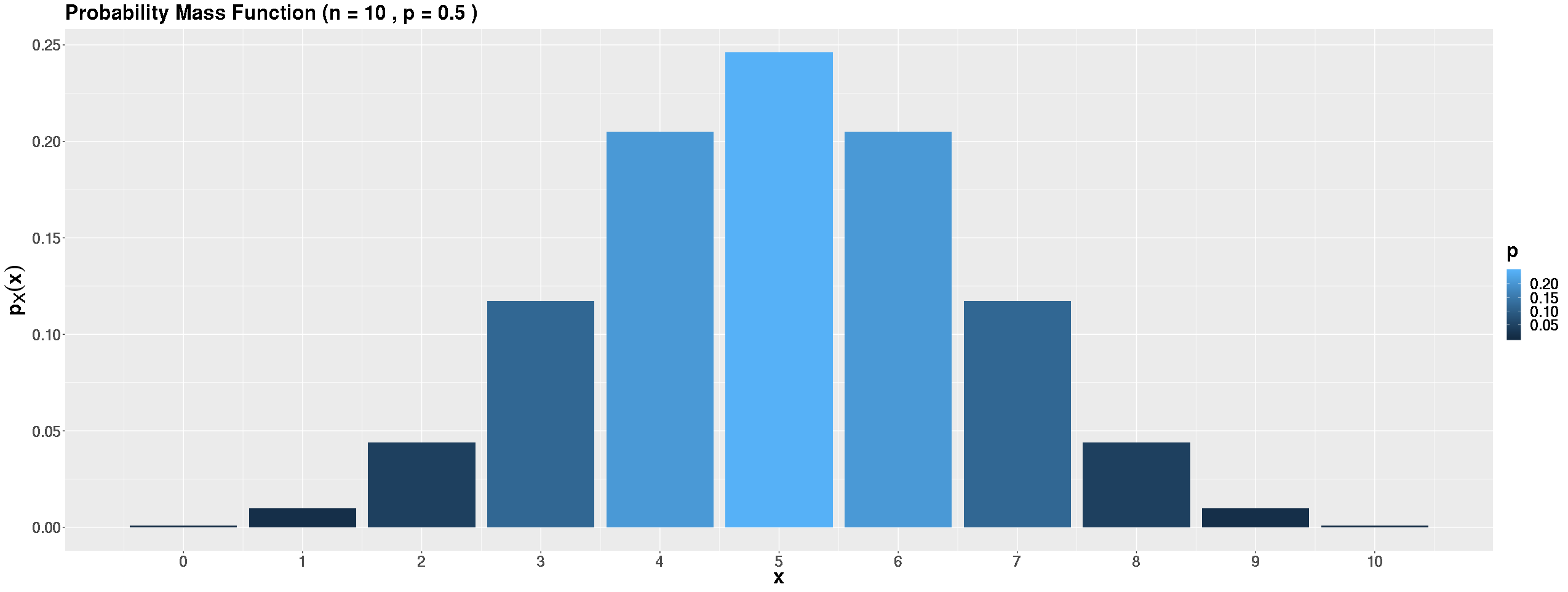

Equal Success and Failure (\(p = 0.5\))

Fig. 5.12 \(p=0.5\)

When success and failure are equally likely, the distribution becomes symmetric around its mean of 5. This creates a bell-shaped pattern.

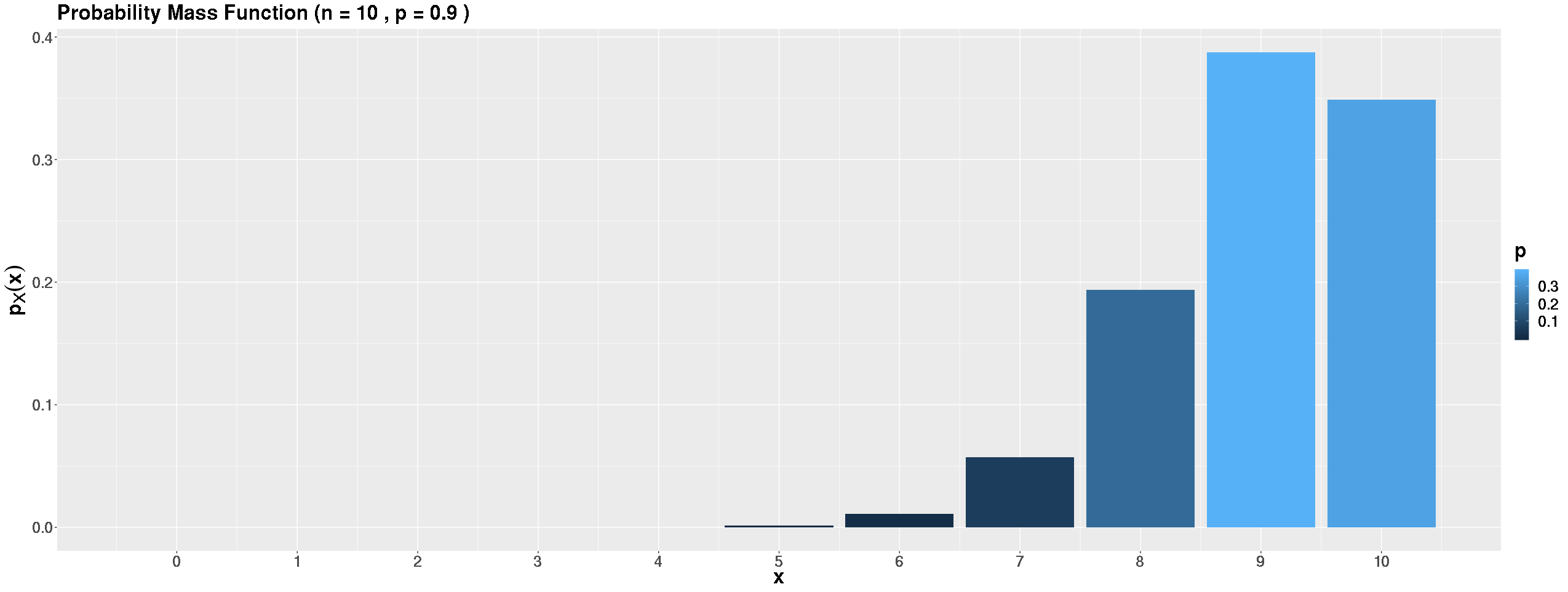

High Success Probability (\(p = 0.9\))

Fig. 5.13 \(p=0.9\)

When success is nearly certain, most probability concentrates near the maximum. We expect \(np = 9\) successes on average, so the event of getting 9 or 10 successes dominates the distribution.
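The shapes described in this section can be reproduced without plotting software. The following is a rough sketch, assuming Python with only the standard library, that prints a text histogram for each value of \(p\) with \(n = 10\). The helper `text_histogram` and its `width` parameter are hypothetical names used only for this illustration.

```python
from math import comb

def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def text_histogram(n, p, width=50):
    """One line per value of x, with a bar roughly proportional to P(X = x)."""
    lines = []
    for x in range(n + 1):
        prob = binom_pmf(x, n, p)
        lines.append(f"{x:2d} {prob:.4f} " + "#" * round(prob * width))
    return lines

for p in (0.1, 0.25, 0.5, 0.9):
    print(f"n = 10, p = {p}, mean = {10 * p:g}")
    print("\n".join(text_histogram(10, p)))
```

The bars pile up near 0 for \(p = 0.1\), are symmetric around 5 for \(p = 0.5\), and pile up near 10 for \(p = 0.9\).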

Example💡: Evaluating a Medical Trial

A pharmaceutical company has developed a new medication for a common illness. Historical data shows that 30% of people with this illness recover naturally without treatment. In a clinical trial, 10 randomly selected patients received the new medication, 9 of whom recovered. Is this strong evidence that the medication is effective, or could this result reasonably occur by chance alone?

Step 1: Confirm BInS Criteria

We confirmed that this is a binomial experiment in Example 4 of the BInS examples earlier in this section.

Step 2: Is the result realistic in nature?

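If the number of recovered patients followed the natural recovery rate, we would have

\[X \sim \text{Bin}(n=10, p=0.3)\]

What is the probability of seeing a recovery rate at least as high as in the current experiment through natural recovery alone? In other words, what is \(P(X\geq 9)\)?

\[P(X \geq 9) = P(X=9) + P(X=10) = \binom{10}{9}(0.3)^9(0.7)^1 + \binom{10}{10}(0.3)^{10}(0.7)^0 \approx 0.000138 + 0.000006 = 0.000144\]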

Step 3: Interpreting the results

The probability of seeing 9 or more recoveries by chance alone is only about 0.000144, or roughly 1 in 7,000. This extremely small probability suggests that observing 9 recoveries is highly unlikely if the medication had no effect.

This analysis provides strong statistical evidence that the medication is effective, though we’d want to see results from larger, more carefully controlled studies before drawing definitive conclusions.
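The tail probability in this example can be checked with a short computation. This is a sketch in Python using only the standard library; `upper_tail` is a hypothetical helper name, and the result is only as good as the assumed 30% natural recovery rate.

```python
from math import comb

def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def upper_tail(k, n, p):
    """P(X >= k) for X ~ Bin(n, p), summing the PMF from k to n."""
    return sum(binom_pmf(x, n, p) for x in range(k, n + 1))

# 9 or more recoveries out of 10 if only natural recovery (p = 0.30) is at work
p_value = upper_tail(9, 10, 0.30)
print(f"P(X >= 9) = {p_value:.6f}")  # P(X >= 9) = 0.000144
print(f"about 1 in {1 / p_value:,.0f}")
```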

5.6.5. Bringing It All Together

Key Takeaways 📝

The binomial distribution models the number of successes in a set of independent and identical trials.

Use the BInS criteria to identify binomial situations: Binary outcomes, Independent trials, \(n\) fixed trials, and the Same success probability on every trial.

The binomial PMF \(p_X(x) = \binom{n}{x} p^x (1-p)^{n-x}\) combines combinatorics with basic probability principles.

Binomial random variables have simple expected value and variance formulas: \(E[X] = np\) and \(Var(X) = np(1-p)\), derived by viewing binomial variables as sums of Bernoulli trials.

Binomial distributions are symmetric when p = 0.5 and increasingly skewed as p approaches 0 or 1.

5.6.6. Exercises

These exercises develop your skills in identifying binomial experiments using the BInS criteria, calculating binomial probabilities, and applying expected value and variance formulas.

Exercise 1: Identifying Binomial Experiments (BInS Criteria)

For each scenario, determine whether it represents a binomial experiment. If yes, identify \(n\) and \(p\). If no, explain which BInS criterion is violated.

(a) A software company deploys code to 50 servers. Each server independently has a 2% chance of experiencing a deployment error. Let \(X\) = number of servers with errors.

(b) A network engineer monitors packets until 10 packets are lost. Let \(X\) = number of packets transmitted before the 10th loss.

(c) A data scientist runs 20 independent A/B tests. Each test has an 80% chance of detecting a true effect when one exists. Let \(X\) = number of tests that detect the effect.

(d) Cards are drawn one at a time from a standard 52-card deck without replacement until 5 cards are drawn. Let \(X\) = number of hearts drawn.

(e) A quality engineer inspects 30 circuit boards from a production batch. Each board independently has a 3% defect rate. Let \(X\) = number of defective boards.

(f) A basketball player takes free throws until she misses. Her free-throw percentage is 85%. Let \(X\) = number of shots taken.

Solution

Part (a): Server deployment — YES, Binomial

Binary: Each server either has an error or doesn’t ✓

Independent: Servers fail independently ✓

n fixed: 50 servers ✓

Same p: Each server has 2% error rate ✓

\(X \sim \text{Bin}(n=50, p=0.02)\)

Part (b): Packets until 10 losses — NO, not Binomial

n fixed: ❌ The number of trials is not fixed — we continue until we observe 10 losses.

This is actually a negative binomial experiment (covered in more advanced courses).

Part (c): A/B tests — YES, Binomial

Binary: Each test either detects the effect or doesn’t ✓

Independent: Tests are run independently ✓

n fixed: 20 tests ✓

Same p: Each test has 80% detection rate ✓

\(X \sim \text{Bin}(n=20, p=0.80)\)

Part (d): Drawing cards without replacement — NO, not Binomial

Independent: ❌ Drawing without replacement means trials are dependent. After drawing a heart, the probability of drawing another heart changes.

With replacement, this would be binomial. Without replacement, the exact distribution is hypergeometric.
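To see how much the dependence matters here, the sketch below compares the exact without-replacement probabilities with the binomial probabilities that would apply with replacement. It assumes Python with only the standard library. The function `hypergeom_pmf` is an illustrative helper implementing the standard hypergeometric counting formula, which goes beyond what this section covers.

```python
from math import comb

def hypergeom_pmf(k, population, successes, draws):
    """P(k successes) when drawing `draws` items without replacement."""
    return (comb(successes, k) * comb(population - successes, draws - k)
            / comb(population, draws))

def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# 5 cards from a 52-card deck containing 13 hearts
for k in range(6):
    without_repl = hypergeom_pmf(k, 52, 13, 5)   # exact, trials dependent
    with_repl = binom_pmf(k, 5, 13 / 52)         # binomial, trials independent
    print(k, round(without_repl, 4), round(with_repl, 4))
```

The two columns are close but not equal, which is why the independence criterion fails for this scenario.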

Part (e): Circuit board inspection — YES, Binomial

Binary: Each board is either defective or not ✓

Independent: Board defects are independent ✓

n fixed: 30 boards ✓

Same p: Each board has 3% defect rate ✓

\(X \sim \text{Bin}(n=30, p=0.03)\)

Part (f): Free throws until miss — NO, not Binomial

n fixed: ❌ The number of trials is not fixed — we continue until a miss occurs.

This is a geometric experiment (Chapter 5.7 topic).

Exercise 2: Basic Binomial Probability Calculations

A cybersecurity firm finds that 15% of phishing emails successfully trick users into clicking malicious links. In a simulated attack, 12 phishing emails are sent to employees.

Let \(X\) = number of employees who click the malicious link.

(a) Verify that \(X\) follows a binomial distribution. State the parameters.

(b) Calculate \(P(X = 2)\), the probability that exactly 2 employees click.

(c) Calculate \(P(X \leq 1)\), the probability that at most 1 employee clicks.

(d) Calculate \(P(X \geq 3)\), the probability that 3 or more employees click.

(e) Find \(E[X]\) and \(\sigma_X\).

Solution

Part (a): Verify binomial and state parameters

Binary: Each employee either clicks or doesn’t ✓

Independent: Employee decisions are independent ✓

n fixed: 12 emails sent ✓

Same p: Each has 15% click rate ✓

\(X \sim \text{Bin}(n=12, p=0.15)\)

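Part (b): P(X = 2)

\[P(X = 2) = \binom{12}{2}(0.15)^2(0.85)^{10} = 66 \times 0.0225 \times 0.19687 \approx 0.2924\]

Part (c): P(X ≤ 1)

\[P(X \leq 1) = P(X=0) + P(X=1) = (0.85)^{12} + 12(0.15)(0.85)^{11} \approx 0.14224 + 0.30122 \approx 0.4435\]

Part (d): P(X ≥ 3)

Using the complement:

\[P(X \geq 3) = 1 - P(X \leq 2) = 1 - \big[P(X \leq 1) + P(X = 2)\big] \approx 1 - (0.44346 + 0.29236) \approx 0.2642\]

Part (e): E[X] and σ_X

\[E[X] = np = 12(0.15) = 1.8\]

\[\sigma_X = \sqrt{np(1-p)} = \sqrt{12(0.15)(0.85)} = \sqrt{1.53} \approx 1.237\]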

Exercise 3: Bernoulli to Binomial Connection

A sensor system consists of 5 independent sensors. Each sensor has a 90% probability of correctly detecting an event.

Let \(B_i\) be the Bernoulli random variable for sensor \(i\):

\[B_i = \begin{cases} 1 & \text{if sensor } i \text{ detects the event} \\ 0 & \text{otherwise} \end{cases}\]

Let \(X = B_1 + B_2 + B_3 + B_4 + B_5\) = total number of sensors that detect the event.

(a) Find \(E[B_i]\) and \(\text{Var}(B_i)\) for a single sensor.

(b) Use the properties of sums to find \(E[X]\).

(c) Use the independence of sensors to find \(\text{Var}(X)\).

(d) Verify your answers using the binomial formulas \(E[X] = np\) and \(\text{Var}(X) = np(1-p)\).

(e) The system triggers an alarm if at least 3 sensors detect the event. Find \(P(X \geq 3)\).

Solution

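Part (a): Single sensor (Bernoulli)

\[E[B_i] = 1(0.90) + 0(0.10) = 0.90\]

\[\text{Var}(B_i) = E[B_i^2] - (E[B_i])^2 = 0.90 - (0.90)^2 = 0.09\]

Alternatively: \(\text{Var}(B_i) = p(1-p) = 0.90 \times 0.10 = 0.09\)

Part (b): E[X] using linearity

\[E[X] = E[B_1] + E[B_2] + \cdots + E[B_5] = 5(0.90) = 4.5\]

Part (c): Var(X) using independence

Since the sensors are independent:

\[\text{Var}(X) = \text{Var}(B_1) + \text{Var}(B_2) + \cdots + \text{Var}(B_5) = 5(0.09) = 0.45\]

Part (d): Verify with binomial formulas

\(X \sim \text{Bin}(n=5, p=0.90)\)

\[E[X] = np = 5(0.90) = 4.5, \qquad \text{Var}(X) = np(1-p) = 5(0.90)(0.10) = 0.45\]

Both values match parts (b) and (c).

Part (e): P(X ≥ 3)

\[P(X \geq 3) = \binom{5}{3}(0.9)^3(0.1)^2 + \binom{5}{4}(0.9)^4(0.1)^1 + \binom{5}{5}(0.9)^5 = 0.0729 + 0.32805 + 0.59049 = 0.99144\]

There is about a 99.14% probability the alarm will trigger.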

Exercise 4: Quality Control Application

A semiconductor manufacturer produces microchips with a 4% defect rate. A batch of 20 chips is randomly selected for testing.

(a) What is the probability that exactly 1 chip is defective?

(b) What is the probability that no chips are defective?

(c) What is the probability that more than 2 chips are defective?

(d) The batch is rejected if 3 or more chips are defective. What is the probability of rejection?

(e) If the defect rate improved to 2%, how would the rejection probability change?

Solution

Let \(X \sim \text{Bin}(n=20, p=0.04)\).

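Part (a): P(X = 1)

\[P(X = 1) = \binom{20}{1}(0.04)^1(0.96)^{19} \approx 0.3683\]

Part (b): P(X = 0)

\[P(X = 0) = (0.96)^{20} \approx 0.4420\]

Part (c): P(X > 2)

\[P(X = 2) = \binom{20}{2}(0.04)^2(0.96)^{18} \approx 0.1458\]

\[P(X > 2) = 1 - P(X \leq 2) \approx 1 - (0.4420 + 0.3683 + 0.1458) = 1 - 0.9561 = 0.0439\]

Part (d): P(X ≥ 3), the rejection probability

Because \(X\) takes only integer values, \(P(X \geq 3) = P(X > 2) \approx 0.0439\).

There is about a 4.4% chance the batch will be rejected.

Part (e): Improved defect rate (p = 0.02)

Let \(Y \sim \text{Bin}(n=20, p=0.02)\).

\[P(Y \geq 3) = 1 - \big[P(Y=0) + P(Y=1) + P(Y=2)\big] \approx 1 - (0.6676 + 0.2725 + 0.0528) = 1 - 0.9929 = 0.0071\]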

The rejection probability drops from 4.4% to 0.71% — about 6 times lower!
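The comparison between the two defect rates can be sketched as a small function of the defect rate. The code assumes Python with only the standard library. The names `binom_cdf` and `rejection_probability` and the default arguments are hypothetical choices that mirror this exercise's batch size of 20 and rejection threshold of 3.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Bin(n, p)."""
    return sum(comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(k + 1))

def rejection_probability(defect_rate, batch_size=20, reject_at=3):
    """P(X >= reject_at), the complement of at most reject_at - 1 defects."""
    return 1 - binom_cdf(reject_at - 1, batch_size, defect_rate)

for rate in (0.04, 0.02):
    print(rate, round(rejection_probability(rate), 4))
```

Writing the calculation as a function of the defect rate makes it easy to explore other rates or thresholds.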

Exercise 5: Software Testing Application

A software team runs automated tests on each code commit. Historically, 8% of commits introduce a bug that the tests detect.

Over one week, there are 25 commits.

(a) What is the expected number of commits that introduce detected bugs?

(b) What is the standard deviation?

(c) What is the probability that exactly 2 commits introduce bugs?

(d) The team lead gets concerned if 5 or more commits introduce bugs in a week. What is the probability of this happening?

(e) In R, you would calculate part (d) as: 1 - pbinom(4, size = 25, prob = 0.08). Explain why we use 4 instead of 5.

Solution

Let \(X \sim \text{Bin}(n=25, p=0.08)\).

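Part (a): Expected value

\[E[X] = np = 25(0.08) = 2\]

Part (b): Standard deviation

\[\sigma_X = \sqrt{np(1-p)} = \sqrt{25(0.08)(0.92)} = \sqrt{1.84} \approx 1.356\]

Part (c): P(X = 2)

\[P(X = 2) = \binom{25}{2}(0.08)^2(0.92)^{23} = 300 \times 0.0064 \times 0.1469 \approx 0.2821\]

Part (d): P(X ≥ 5)

Using complement: \(P(X \geq 5) = 1 - P(X \leq 4)\)

\[P(X \leq 4) \approx 0.1244 + 0.2704 + 0.2821 + 0.1881 + 0.0899 = 0.9549\]

\[P(X \geq 5) \approx 1 - 0.9549 = 0.0451\]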

There is about a 4.5% chance of 5 or more buggy commits.

Part (e): Why use 4 in R?

The R function pbinom(q, size, prob) calculates \(P(X \leq q)\).

To get \(P(X \geq 5)\), we need \(1 - P(X \leq 4)\).

pbinom(4, 25, 0.08) gives \(P(X \leq 4)\)

1 - pbinom(4, 25, 0.08) gives \(P(X \geq 5)\)

Using pbinom(5, …) would give \(P(X \leq 5)\), which includes \(P(X = 5)\) — not what we want!

Alternatively: pbinom(4, 25, 0.08, lower.tail = FALSE) directly gives \(P(X > 4) = P(X \geq 5)\).
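The same off-by-one reasoning can be illustrated outside R. The sketch below assumes Python with only the standard library. `binom_cdf` is a hypothetical stand-in that computes \(P(X \leq q)\), the quantity `pbinom` returns by default, and is not an R or library function.

```python
from math import comb

def binom_cdf(q, n, p):
    """P(X <= q) for X ~ Bin(n, p)."""
    return sum(comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(q + 1))

n, p = 25, 0.08
at_least_5 = 1 - binom_cdf(4, n, p)   # P(X >= 5): the quantity we want
more_than_5 = 1 - binom_cdf(5, n, p)  # P(X >= 6): wrongly drops P(X = 5)
print(round(at_least_5, 4), round(more_than_5, 4))

# The gap between the two is exactly the probability of X = 5
print(round(at_least_5 - more_than_5, 4))
```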

5.6.7. Additional Practice Problems

True/False Questions (1 point each)

If \(X \sim \text{Bin}(n, p)\), then \(E[X] = np(1-p)\).

Ⓣ or Ⓕ

A binomial random variable counts the number of trials until the first success.

Ⓣ or Ⓕ

For a binomial distribution, the trials must be independent.

Ⓣ or Ⓕ

If \(X \sim \text{Bin}(10, 0.5)\), then the distribution of X is symmetric.

Ⓣ or Ⓕ

Sampling without replacement from a large population can be approximated as binomial.

Ⓣ or Ⓕ

\(\text{Var}(X)\) is maximized when \(p = 0.5\) for a fixed \(n\).

Ⓣ or Ⓕ

Multiple Choice Questions (2 points each)

If \(X \sim \text{Bin}(15, 0.20)\), what is \(E[X]\)?

Ⓐ 0.20

Ⓑ 2.4

Ⓒ 3.0

Ⓓ 12.0

For \(X \sim \text{Bin}(20, 0.3)\), what is \(\text{Var}(X)\)?

Ⓐ 4.2

Ⓑ 6.0

Ⓒ 14.0

Ⓓ 18.0

Which of the following is NOT required for a binomial experiment?

Ⓐ Fixed number of trials

Ⓑ Independent trials

Ⓒ Normally distributed outcomes

Ⓓ Constant probability of success

A fair coin is flipped 6 times. What is \(P(X = 3)\) where X is the number of heads?

Ⓐ 0.1563

Ⓑ 0.3125

Ⓒ 0.5000

Ⓓ 0.6563

Answers to Practice Problems

True/False Answers:

False — \(E[X] = np\). The formula \(np(1-p)\) is for variance, not expected value.

False — Binomial counts the number of successes in n trials. Counting trials until first success is the geometric distribution.

True — Independence is the “I” in the BInS criteria and is essential for binomial.

True — When \(p = 0.5\), the binomial distribution is symmetric around its mean \(np = 5\).

True — When the population is much larger than the sample (rule of thumb: population > 10× sample), the dependence from sampling without replacement is negligible.

True — \(\text{Var}(X) = np(1-p)\). For fixed n, the product \(p(1-p)\) is maximized at \(p = 0.5\) where \(p(1-p) = 0.25\).

Multiple Choice Answers:

Ⓒ — \(E[X] = np = 15 \times 0.20 = 3.0\).

Ⓐ — \(\text{Var}(X) = np(1-p) = 20 \times 0.3 \times 0.7 = 4.2\).

Ⓒ — Binomial outcomes are discrete (0, 1, 2, …, n), not normally distributed. The other three are the BInS criteria (B is implied by having a success probability).

Ⓑ — \(P(X=3) = \binom{6}{3}(0.5)^3(0.5)^3 = 20 \times 0.125 \times 0.125 = 20 \times 0.015625 = 0.3125\).